This is the status of a work in progress currently hosted locally on my computer.

Last content + text update: 14/06/2021 see wiki

"The Internet browser has several affinities with the device of the painting, playing both the role of a frame and that of an 'open window' on the representation" ref ___

I want to create a Web instrument using responsive design and Web events in order to modulate sounds in playful ways. Once this instrument is made, the idea would be to gather a few computer users together and make them perform as an orchestra.

Easy to use and access (no download, no output), this instrument aims to give any Web user the possibility to play with sound by interacting with their own device and interface. As with any other instrument, it is possible to play some notes, but trickier to compose with it. Naturally, the more you play an instrument, the better you understand its mechanics and can play with them (e.g. moving the mouse along the Y axis affects the frequency, moving the mouse along the X axis affects the range, resizing the page affects the echo, clicking several times creates a BPM; the possibilities are quite endless, but you can only understand this by trying things out). Instead of giving a set of instructions on the page itself, I wish to make this page as empty as it can be, just like the body of an instrument.

Both aesthetically and technically, I am inspired by an important element of most wind and string instruments called the resonance chamber, which basically "uses resonance to enhance the transfer of energy from a sound source (e.g. a vibrating string) to the air." I like the idea of imagining the canvas as a resonance chamber, because it accentuates the role of the format itself.

Following that line of thought:

- the smaller the canvas, the shorter and higher the sound can get

- the bigger the canvas, the longer and lower the sound can get
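A minimal sketch of this resonance-chamber idea, written as plain JavaScript so it can run outside p5.js. The area bounds, the frequency range and the duration range are all hypothetical values picked for illustration, and `linearMap` is a stand-in for the p5.js `map()` function.

```javascript
// Linear re-mapping, comparable to p5.js map() without clamping.
function linearMap(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
}

// Hypothetical bounds, chosen only for illustration.
const MIN_AREA = 320 * 240;    // smallest canvas considered, in px²
const MAX_AREA = 1920 * 1080;  // largest canvas considered, in px²
const HIGH_FREQ = 880;         // Hz, played by the smallest canvas
const LOW_FREQ = 110;          // Hz, played by the largest canvas
const SHORT_S = 0.1;           // seconds, note length for the smallest canvas
const LONG_S = 2.0;            // seconds, note length for the largest canvas

// Small canvas -> short and high sound, big canvas -> long and low sound.
function chamberSound(canvasWidth, canvasHeight) {
  const rawArea = canvasWidth * canvasHeight;
  // Clamp so that extreme window sizes stay inside the assumed ranges.
  const area = Math.min(Math.max(rawArea, MIN_AREA), MAX_AREA);
  return {
    freq: linearMap(area, MIN_AREA, MAX_AREA, HIGH_FREQ, LOW_FREQ),
    duration: linearMap(area, MIN_AREA, MAX_AREA, SHORT_S, LONG_S),
  };
}
```

In a p5.js sketch, `chamberSound(windowWidth, windowHeight)` could be called from `windowResized()` so that every resize retunes the instrument.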

As a side note, electronic instruments are not the only ones that can play a continuous sound: bagpipes, for example, continuously play a "bourdon" (drone) by constantly filling the bag with air and pressing it out. As with many other instruments, a permanent movement is necessary to create sound.

As mentioned previously, I would like the canvas to remain quite minimal, because I think of it as a resonance chamber. However, I chose to implement a grid, for reasons that I will explain. But first of all, I need to credit the original version of this grid, before I started to transform it.

- 1st: give the user a graphic marker so that they can tell what they are doing and get a sense of the spatialization of the sound.

- 2nd: give the user a playful visual input reacting to the sound and to user interaction. It is inspired by the notions of diffraction and reflection that I am investigating and confronting.

"Each bit of matter, each moment of time, each position in space is a multiplicity, a superposition/entanglement of (seemingly) disparate parts." (Diffracting Diffraction: Cutting Together-Apart, Karen Barad).

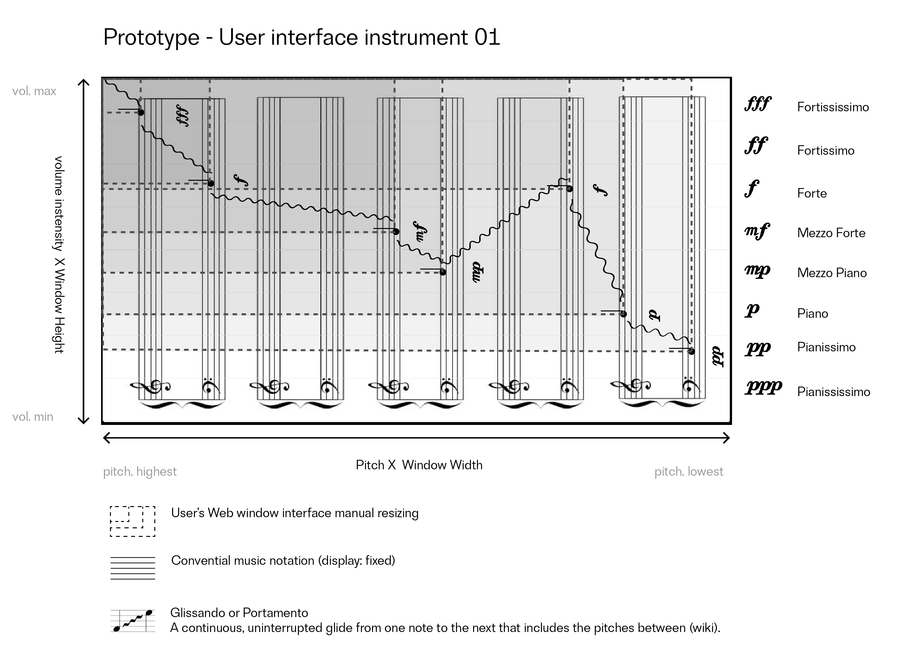

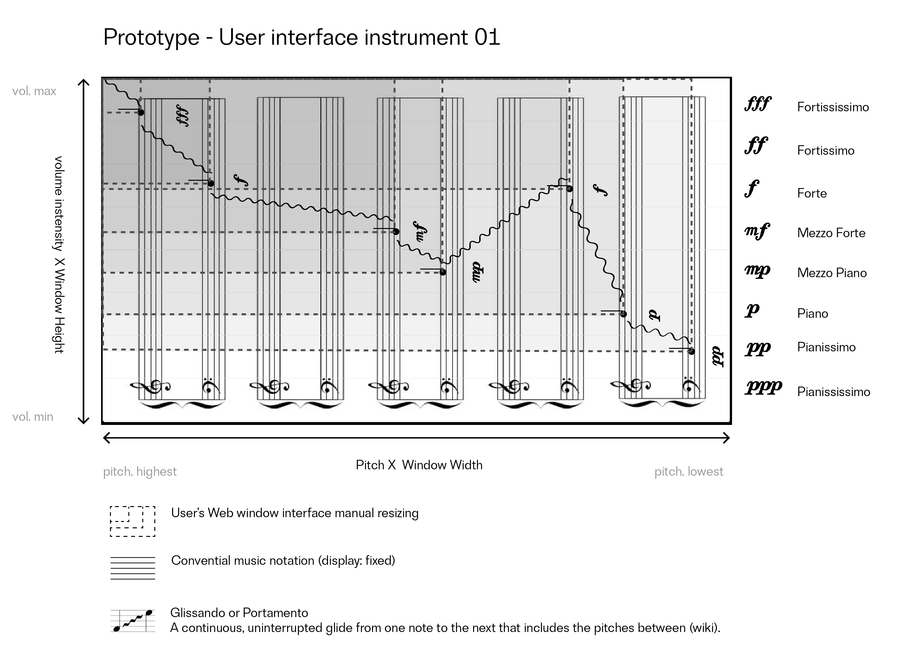

First schema of an upcoming prototype attempting to correlate sound pitch and release with window height and width. This schema is based on conventional musical notation, before being translated into a second, more analog musical notation.

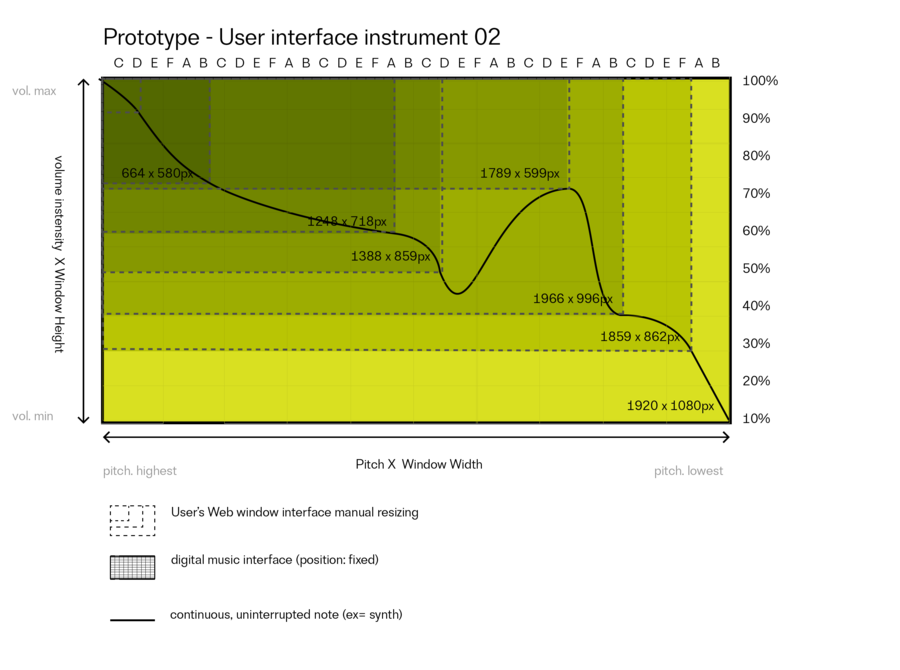

In this second sketch, I take a step from an analog to a more digital musical interface.

- As always, Jonas Lund's net artwork about the user interface, What You See Is What You Get, is an important starting point, as it "actually consists of a device that saves the resolution of the website’s different users’ screens and reproduces them in chronological order by display of rectangles of corresponding sizes."

- Another usual reference is Kandinsky's Concerning the Spiritual in Art, which compares shades of color to musical instruments.

- Also from Kandinsky, Point and Line to Plane, which theorizes the tensions created by basic shapes (such as a point and a line) within a square format (plane).

- (Way) more recently, Cascade by Raphael Bastide is quite interesting: it suggests an instantaneous/live reaction between sounds and visual web design, and it is a play tool. Here, you can play with any kind of CSS values and create new sounding objects depending on the values you change. It is a sound kit that requires the user to connect a MIDI output and inspect the page in order to add/modify CSS values live.

- What is the agency of the different elements in a responsive design?

- How do they intra-act?

- What is supposed to be flexible, and what is actually fixed?

- What can change beyond preset boundaries?

- Why is there a systematic use of grotesque typography?

- Are you trying to reach some kind of default aesthetics?

- What about customizing this range of possibilities instead of keeping the same visual aspect?

- Be careful not to get stuck in a loop about this whole subject of Web perspective: where are you trying to go with it, and what are you trying to say?

About modernism and post-modernism: The Communist Designer, the Fascist Furniture Dealer, and the Politics of Design

I chose to use the p5.js and p5.sound libraries in order to manage both audio and visual aspects and make them (seemingly or not) react to each other.

As seen in my two early sketches (1, 2), it is all about mapping the sounds inside the (ever-changing) canvas dimensions.

Right now I am working with frequency (for windowWidth and mouseX) and amplitude (for windowHeight and mouseY).

I define the height and width of the user interface window as the ranges for the frequency and amplitude of the sound. In other words, you can make the canvas bigger, or simply zoom out, to widen these ranges and allow a broader spectrum of sounds.
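To make this concrete, here is a small sketch of how the window size could set the ranges. It is only one possible reading: `BASE_FREQ`, `HZ_PER_PIXEL` and `AMP_PER_PIXEL` are assumed constants that do not come from the actual sketch, `linearMap` stands in for the p5.js `map()` function, and treating the top of the window as the loudest position is also an assumption.

```javascript
// Assumed constants, not taken from the original sketch.
const BASE_FREQ = 100;        // Hz heard at mouseX = 0
const HZ_PER_PIXEL = 1;       // each pixel of window width adds 1 Hz of range
const AMP_PER_PIXEL = 0.001;  // each pixel of window height adds 0.001 of amplitude

// Linear re-mapping, comparable to p5.js map() without clamping.
function linearMap(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
}

// A wider window gives a wider frequency range,
// a taller window gives a wider amplitude range (capped at 1).
function soundRanges(windowWidth, windowHeight) {
  return {
    freqLow: BASE_FREQ,
    freqHigh: BASE_FREQ + windowWidth * HZ_PER_PIXEL,
    ampLow: 0,
    ampHigh: Math.min(1, windowHeight * AMP_PER_PIXEL),
  };
}

// Frequency follows mouseX, amplitude follows mouseY.
function soundAt(mouseX, mouseY, windowWidth, windowHeight) {
  const r = soundRanges(windowWidth, windowHeight);
  return {
    freq: linearMap(mouseX, 0, windowWidth, r.freqLow, r.freqHigh),
    // Assumption: the top of the window (mouseY = 0) is the loudest position.
    amp: linearMap(mouseY, 0, windowHeight, r.ampHigh, r.ampLow),
  };
}
```

With these assumed constants, a 1280x690 window spans 100 Hz to 1380 Hz, while a 1920x1080 window reaches 2020 Hz, so enlarging the window widens what can be played.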

Using the basic interactions offered by p5.js, I want the cursor position (mouseX and mouseY) to fragment (or diffract?) the background into a grid around the cursor. The notions of reflection and diffraction (see Diffraction vs Reflection & Diffracting Diffraction, which I still need to dig way more into) are already, and will remain, closely connected to the way this prototype evolves (08/06/2021).

I would like it to be fluid, which requires a certain ratio of squares displayed depending on the canvas size. Having too many squares will slow down the frame rate and, logically, the sound too. Then it won't be continuous anymore, which is also interesting. By doing so, and in addition to some work on the light and overlays, I want the interface to react to the user's interaction while remaining very abstract.
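One way to keep that ratio under control is to derive the square size from the canvas size and a budget of squares. The sketch below is an illustration, not the grid actually used here: `gridFor` and the `maxCells` budget are hypothetical names, and the right budget would have to be found by watching the frame rate.

```javascript
// Pick the smallest square size that keeps the grid within a budget of cells.
// maxCells is a hypothetical performance budget, to be tuned by hand.
function gridFor(canvasWidth, canvasHeight, maxCells) {
  // First guess: squares whose combined area fills the canvas exactly.
  let cell = Math.max(1, Math.ceil(Math.sqrt((canvasWidth * canvasHeight) / maxCells)));
  let cols = Math.ceil(canvasWidth / cell);
  let rows = Math.ceil(canvasHeight / cell);
  // Partial squares at the edges can push the count over budget,
  // so grow the squares until the grid fits.
  while (cols * rows > maxCells) {
    cell += 1;
    cols = Math.ceil(canvasWidth / cell);
    rows = Math.ceil(canvasHeight / cell);
  }
  return { cell, cols, rows };
}
```

For a 1280x690 canvas and a budget of 400 squares, this gives 50 px squares in a 26 by 14 grid (364 squares). Resizing the window then changes the size of the squares rather than their number, which should keep the drawing cost roughly constant.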

First, I add an oscillator, which doesn't yet offer many different sounds, but allows quite some freedom in terms of amplitude, frequency, pan, phase, multiplication, and scaling (all references to be found here).

screenshot 1 [08/06/2021]

screenshot 2 [08/06/2021]

screenshot 3 [08/06/2021]

screenshot 4 [08/06/2021]

Looking at Oscillator Frequency and trying out frequency symmetry together on SyncFiddle.

With one oscillator and one waveform already set up in the code, we copied the oscillator and pasted it into the same sketch, but inverted the values of the second sound (amplitude and frequency) in order to make it symmetrical with the first sound.

First oscillator (original)

let freq = map(mouseX, 0, width, flow, fhigh); osc.freq(freq);

let amp = map(mouseY, 0, height, ahigh, alow); osc.amp(amp);

Second oscillator (inverted)

let freq2 = map(mouseX, 0, width, fhigh, flow); osc2.freq(freq2);

let amp2 = map(mouseY, 0, height, alow, ahigh); osc2.amp(amp2);
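The symmetry can be checked outside p5.js with a small stand-alone version of the same mapping. In this sketch, `linearMap` is a stand-in for the p5.js `map()` function and `mirroredOscillators` is a hypothetical helper name; the range values in the usage note are made up for the example.

```javascript
// Linear re-mapping, comparable to p5.js map() without clamping.
function linearMap(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
}

// Compute the settings of both oscillators for one mouse position.
// The second oscillator swaps the ends of each output range,
// so it mirrors the first one around the middle of the range.
function mirroredOscillators(mouseX, mouseY, width, height, range) {
  const { flow, fhigh, alow, ahigh } = range;
  return {
    first: {
      freq: linearMap(mouseX, 0, width, flow, fhigh),
      amp: linearMap(mouseY, 0, height, ahigh, alow),
    },
    second: {
      freq: linearMap(mouseX, 0, width, fhigh, flow),
      amp: linearMap(mouseY, 0, height, alow, ahigh),
    },
  };
}
```

For example, with `flow = 100`, `fhigh = 500`, `alow = 0`, `ahigh = 1` on a 400x400 canvas, the mouse at (100, 100) gives 200 Hz at amplitude 0.75 for the first oscillator and 400 Hz at amplitude 0.25 for the second. The two frequencies always add up to `flow + fhigh`, and they meet in the middle of the canvas.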

Following this, I wanted to see what it would look like if I reimplemented a waveform and added another one following the same symmetry idea. I tried all combinations and found some interesting reflection/refraction wave possibilities.

SinOsc+SqrOsc[09/06/2021]

SinOsc+TriOsc[09/06/2021]

SqrOsc+SawOsc[09/06/2021]

TriOsc+SawOsc[09/06/2021]

TriOsc+SqrOsc[09/06/2021]

With the help of an online tone generator, and by displaying the mouseX and mouseY values directly on the canvas, I try to put the cursor in the right position for the sound to match each tone of an entire octave (C4, D4, E4, F4, G4, A4, B4).

Then I bookmarked the tones and implemented them with a simple mouseOver interaction.

Not Tuned[09/06/2021]

Tuned on C4♯[09/06/2021]

I find it interesting that a cookbook requires quite a few things even before you start the recipe: you need to have all or most of the ingredients, be familiar with the metric system, have some specific cooking tools, and sometimes you will even need special skills that only an experienced cook would have. All together, they form a set of conditions that allow you to make the recipe correctly.

With this instrument, it is a bit the same. Remember that the size of the canvas affects everything you hear, because resizing the canvas results in stretching the audio range. You could of course make some music with your own specific canvas size, but if you want this visual assistance to be of any help, you will obviously need to set the screen to the exact size at which the instructions were created. Here, the size of the window in which these bookmarks were created was 1280x690px (WxH).

Following this step, it becomes possible to tune on a song more easily, but also to start composing with some more input. I will look for a song that would be easy to tune on and, if it works, create both audio and visual instructions for it.

As a preview of the upcoming broadcast n°8, just a small demonstration. In this audio file, I set the screen size to 1280x690px and tune on C4, D4, E4, F4, G4, A4, B4.

"Set your screen size at X value 1280 pixels, Y value 690 pixels."

(Turning on oscillator)

(Tuning on C4)

(mouseposition) "C4, X value 175 pixels, Y value 434 pixels."

(Tuning on D4)

(mouseposition) "D4, X value 209 pixels, Y value 373 pixels."

(Tuning on E4)

(mouseposition) "E4, X value 246 pixels, Y value 329 pixels."

(Tuning on F4)

(mouseposition) "F4, X value 263 pixels, Y value 222 pixels."

(Tuning on G4)

(mouseposition) "G4, X value 311 pixels, Y value 148 pixels."

(Tuning on A4)

(mouseposition) "A4, X value 352 pixels, Y value 69 pixels."

(Tuning on B4)

(mouseposition) "B4, X value 424 pixels, Y value 54 pixels."

(Tuning on B3)

(mouseposition) "B3, X value 145 pixels, Y value 541 pixels."

(Turning off oscillator)

"Thank you for listening... Next week, tune in for the recording of Nocturne in C Sharp Minor (No. 20) Arrangement for Web oscillator."

Instructions, part of the cookbook for broadcast 7[10/06/2021]

Original

Arr. for Theremin & Piano

Nocturne in C-Sharp Minor, B. 49 (Arr. for Theremin & Piano) · Clara Rockmore

Now I am going to play Nocturne in C-Sharp Minor, B. 49 (Arr. for Theremin & Piano) by Clara Rockmore and try to tune on the notes. Then I will play it again and again until I can follow the musical composition quite accurately. Then I will record.

12/06/2021

I found out that every tone matches an exact sound frequency (for example, 440 Hz corresponds to A4).

This is helpful because p5.js also lets me display both the sound frequency and the mouseX position on the screen at the same time, so I could get the exact position matching every tone and draw a vertical line for each of them!
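The positions of those vertical lines can also be computed instead of searched by hand. The sketch below assumes twelve-tone equal temperament with A4 at 440 Hz, and assumes that the frequency is mapped linearly across the canvas width; `noteFrequency`, `xForFrequency` and `noteMarkers` are hypothetical helper names, and the frequency range in the usage note is made up.

```javascript
// Equal-temperament frequency of a MIDI note number, with A4 (MIDI 69) at 440 Hz.
function noteFrequency(midiNote) {
  return 440 * Math.pow(2, (midiNote - 69) / 12);
}

// Inverse of a linear frequency mapping across the canvas width:
// returns the X position at which the given frequency is heard.
function xForFrequency(freq, canvasWidth, freqLow, freqHigh) {
  return ((freq - freqLow) / (freqHigh - freqLow)) * canvasWidth;
}

// MIDI note numbers for the natural notes of the fourth octave.
const OCTAVE = { C4: 60, D4: 62, E4: 64, F4: 65, G4: 67, A4: 69, B4: 71 };

// One marker (name, frequency, X position) per note of the octave.
function noteMarkers(canvasWidth, freqLow, freqHigh) {
  return Object.entries(OCTAVE).map(([name, midi]) => {
    const freq = noteFrequency(midi);
    return { name, freq, x: xForFrequency(freq, canvasWidth, freqLow, freqHigh) };
  });
}
```

With a 1280 px wide canvas and an assumed range of 100 Hz to 1380 Hz, `noteMarkers(1280, 100, 1380)` places A4 at X = 340. In a p5.js sketch, each marker could then be drawn with `line(m.x, 0, m.x, height)`. Since the positions depend on the canvas width, they would need to be recomputed after every resize.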

It then becomes way easier to tune on a track/song and understand what notes are being played! I am training as much as I can to play over Nocturne in C Sharp Minor (No. 20), and the results are getting better and better. [12/06/2021]

[12/06/2021] Right now I am working more on the sound itself, pushing further what we did with Michael on frequency symmetry / reflection / etc. The results are very interesting when, for example, trying to combine sine and square oscillations. I am currently combining 5 frequencies in an almost mathematical way so that the oscillator sounds more interesting. To be continued soon.

Once the instrument is working, the idea would be to bring together a group of users with their computers (preferably different kinds of computers), pretty much like a musical orchestra. Each user handles their own instrument, and so a single note. Each window dimension (by default) releases a continuous sound when opened. The performative exercise would then be to tune them together, exactly as a philharmonic orchestra would before performing a musical composition together.

Here is a basic audio example of what I imagine being replicated with my instrument.

It is possible to do that with OBS and BlackHole. 14/06/2021

I am just putting a few useful screenshots here for when I need to do this setup again.

14/06/2021

- Go as far as possible in terms of sound possibilities, and give the user an instrument related to their browser or computer system (use detector)

- Generate a BPM when mouseClicked?

- Cross-read and annotate in the diffraction/reflection field
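For the BPM idea, one possible starting point is to derive a tempo from the time between clicks. This is only a sketch of that idea: `bpmFromClicks` is a hypothetical helper, and it assumes the click times are collected in milliseconds, for instance by pushing `millis()` into an array from the p5.js `mouseClicked()` handler.

```javascript
// Estimate a tempo in beats per minute from a list of click times in milliseconds.
// Returns null when there are not enough clicks to measure an interval.
function bpmFromClicks(clickTimesMs) {
  if (clickTimesMs.length < 2) return null; // a tempo needs at least one interval
  const first = clickTimesMs[0];
  const last = clickTimesMs[clickTimesMs.length - 1];
  // Mean time between consecutive clicks.
  const meanIntervalMs = (last - first) / (clickTimesMs.length - 1);
  if (meanIntervalMs <= 0) return null; // guard against identical timestamps
  return 60000 / meanIntervalMs; // 60000 ms in one minute
}
```

Four clicks spaced 500 ms apart, `bpmFromClicks([0, 500, 1000, 1500])`, give 120 BPM. Keeping only the last few clicks in the array would let the tempo follow the player as they speed up or slow down.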