This is some sort of devlog from the Summer Session residency at V2. Object Oriented Choreography got selected by Sardegna Teatro and we were sent to the Netherlands* to work for the whole summer**.

* actually i was already here

** actually my plan for the summer was really different but what can i do

Take everything as a WIP because it's literally that

Workshop?

The third iteration of OOC could be a two-part workshop: in the first part participants co-design a VR environment with a custom multiplayer 3D editor. The second half is a dance workshop with a choreographer who guides the participants and explores with them the virtual reality they created together. The VR headset is passed from hand to hand as a catalyst, transformed from a single-user device into a wandering and plural experience.

-

design the VR environment together

- to encode information with spatial and body knowledges

- to achieve meaningful and expressive interactivity through participation

- to take into account multiple and situated points of view

-

starting point is the essay on the Zone written for the previous iteration

- the essay

- excerpts used as prompt, focus on using space as an interface with the body

-

explore the collective VR environment

- to decode information with spatial and body knowledges

- to transform vr in a shared device

- who is inside the VR trusts the ones outside

-

the ones outside the VR take care of who's inside

-

performative workshop

- stretching and warming up

- exercises: moving with hybrid space

- improvisation

Outcomes:

1. documentation of the workshop

2. a different 3d environment for each iteration of the workshop i.e digital gallery ?

3. the 3D editor

first part - design the VR environment

-

how? with a custom 3D editor

- a kind of tiltbrush?

- super simple editor, limited functionality

- work with volumes, maybe images (texture)? maybe text?

-

how do we deal with the multiplayer aspect ?

- how do we deal with the temporality of creation?

- how can participants collaborate together if there is only 1 VR system?

- take into account that our VR system is:

- headset

- 2 controllers

- 3 motion trackers

- think of collaborative uses of these six pieces of hardware

- mixed editor ?

- accessible from outside the vr system ?

- like a web interface and a vr interface ? multiplayer with different kind of access and functionality ?

- like vr for the volumes and web for images and text ?

- how, technically?

-

VR interface

-

for modelling volumes: dynamic meshes with marching cubes

-

for UI: https://github.com/ocornut/imgui

-

Web interface

- three.js

- vue.js

-

-

references

-

see

- terraforming - sebastian lague https://www.youtube.com/watch?v=vTMEdHcKgM4, for volumes modelling

- my inner wolf - studio moniker https://studiomoniker.com/projects/myinnerwolf, for the multiplayer work with images and textures

what's the plan:

- transition from performance to workshop

- participative forms of interaction ?

- simplify what's there already

what's the point?

-

make sense together of a complex, contradictory system such as : the massive digital infrastructure

-

what does it mean: to make sense together? to accept the limits of our own individual description and join others to have a better view (renegotiation of complexity?)

what are our roles here?

- facilitators?

- to provide some tools and a context around them

- which kind of tools

Mapping the algorithm

Our technological environment is made of abstract architectures built of hardware, software and networks. These abstract architectures organize information, resources, bodies, time; in fact they organize our life. Yet, they can be really obscure and difficult to grasp, even to imagine.

Within VR we can transform these abstract architectures into virtual ones: spaces that are modelled on the nature, behaviour, and power relations around specific technologies. Places that constrain the movements of our body and at the same time can be explored with the same physical knowledge and awareness.

Starting from one specific architecture we model and map it together with the public.

This iteration of OOC is a performance with the temporality of a two-part workshop: in the first part participants model the virtual environment together with a custom VR editor that lets them create the space in 1:1 scale.

The second half is a performative workshop with a choreographer who guides the participants and explores with them the virtual reality they created together. The VR headset is passed from hand to hand as a way to tune in and out of the virtual space, transformed from a single-user device into a wandering and plural experience.

Since an abstract architecture is composed of several entities interacting together, the dramaturgical structure can be written following them. The narration of the modeling workshop as well as the performative exercises, from the warm-up to the final improvisation, can be modeled on the elements of the architecture.

~

The idea of having the public modeling the space and exploring with the performer responds to several needs:

- a virtual space is better experienced first hand

- meaningful and expressive forms of interaction

- making sense together of black box algorithms

- participants are aware of what's happening inside the VR and so there is no need for other visual support

To make an example: the first OOC was modeled on a group chat. The connected participants were represented as clients placed in a big circular space, the server. Within the server, the performer acted as the algorithm, taking messages from one user to the other.

Could it be done in a different way?

Here are three scenarios:

Workshop

- a two-part workshop: in the first part participants co-design a VR environment with a custom multiplayer 3D editor. The second half is a dance workshop with a choreographer who guides the participants and explores with them the virtual reality they created together. The VR headset is passed from hand to hand as a catalyst, transformed from a single-user device into a wandering and plural experience.

- it has a particular temporality: it is not intense as a performance and the pace can be adjusted to keep everyone engaged.

- it follows the idea of the lecture performance, steering toward a more horizontal and collaborative way of making meaning

- it provides facilitation

- cannot be done the same day as the presentation, at least a couple of days before (less time for rehearsal)

Installation:

- There is the VR editor tool and the facilitation of the workshop is recorded as a text (maybe audio?), participants can follow it through and create the environment while participating. the text is written with the choreographer / performer. it's a mix between the two moments of the workshop. the performer is following the same script.

-

vr is used as a single player device, intimate experience, asmr or tutorial vibe

- probably doable up to two or three people at the same time? ( should try )

- a platform to see different results?

- how long should it be to be meaningful for the public? at least 10 min? 15 min ?

Platform:

- interactions and performance happen in different moments

- user generated contents

- we gather contents online and use it to build the stage for the performance

- there is a platform in which people can build space? does it make sense if it's not done with vr things ? aka involving directly the body?

A draft timetable

-

week 1 _ 18-24 jul

- define concept

- draft scenario

- define process

- schedule and timetable

- plan outcomes

- presentation (with visual ref and examples)

-

week 2 _ 25-31 jul

- research and writing for workshop

- technical setup research for editor

- vr editor research and experiments

-

understand logistic for workshop moments?

-

25 - 26:

- workshop research

- book of shaders

-

27

- book of shaders

- setup vr editor basic

-

28

- workshop research / writing

- book of shaders

- setup vr editor prototype

-

29

- workshop research / writing

- book of shaders

- setup vr editor prototype

-

30~31 buffer

- meeting with sofia

- meeting with ste & iulia

- update log and sardegna

-

week 3 _ 1-7 aug

- first workshop text draft

- first working prototype for vr editor

- set up rehearsal

-

week 4 _ 8-14 aug

- week 5 _ 15-21 aug

- week 6 _ 22-28 aug

- week 7 _ 29-4 sep

- week 8 _ 5-8 sep

Sparse ideas

Tracker as point lights during performance (see FF light in cave)

References

- The emergence of algorithmic solidarity: unveiling mutual aid practices and resistance among Chinese delivery workers, read

- Your order, their labor: An exploration of algorithms and laboring on food delivery platforms in China, DOI:10.1080/17544750.2019.1583676

- The algorithmic imaginary: exploring the ordinary affects of Facebook algorithms, DOI:10.1080/1369118X.2016.1154086

- Algorithms as culture: Some tactics for the ethnography of algorithmic systems - read

- Redlining the Adjacent Possible: Youth and Communities of Color Face the (Not) New Future of (Not) Work read

An overview for Sofia:

notes from 02/22

- focus on how the space influences the body

- the public doesn't need to look at the phone all the time

- interaction from the public changes the space

- performer not all the time inside the vr, there could be moments outside (ex. intro, outro)

- focus on dramaturgical development

- participants should recognize the results of their own interactions

- the public needs to see what the performer sees (or to have another visual support) -> projection?

concept

Our technological environment is made of abstract architectures built of hardware, software and networks. These abstract architectures organize information, resources, bodies, time; in fact they organize our life. Yet, they can be really obscure and difficult to grasp, even to imagine.

Being in space is something everyone has in common, an accessible language. Space is a shared interface. We can use it as a tool to gain awareness and knowledge about complex systems.

Within VR we can transform these abstract architectures into virtual ones: spaces that are modelled on the nature, behaviour, and power relations around specific technologies. Places that constrain the movements of our body and at the same time can be explored with the same physical knowledge and awareness. (like what we did for the chat)

Starting from one specific architecture (probably the food delivery digital platforms typical of the gig economy, which move riders around) we model and map it together with the public. Since an abstract architecture is composed of several entities interacting together, a strong dramaturgical structure can be written following the elements of the architecture.

how to - two options

- performance as a workshop

a performance with the temporality of a two-part workshop: in the first part participants model the virtual environment together with a custom VR editor that lets them create the space in 1:1 scale.

Then a performative workshop with a choreographer / performer who guides the participants and explores with them the virtual reality they created together. The VR headset is passed from hand to hand as a way to tune in and out of the virtual space, transformed from a single-user device into a wandering and plural experience.

- performance as an installation

The VR editor is used as an installation. Besides the normal functionalities to model the environment, it contains a timeline with the structure of the workshop recorded as audio. The performer activates the installation following the script. The text is written with the choreographer / performer. it's a mix between the two moments of the workshop version described before. After the performance, participants (up to three at the same time) can follow the audio and be guided in the creation of the environment.

~

Both options can be activated multiple times, with different results. The resulting 3D environments can be archived on a dedicated space (like a showcase website) in order to document, (communicate, and $ell the project again for further iterations)

___..._

_,--' "`-.

,'. . \

,/:. . . .'

|;.. . _..--'

`--:...-,-'""\

|:. `.

l;. l

`|:. |

|:. `.,

.l;. j, ,

`. \`;:. //,/

.\\)`;,|\'/(

` `itz `(,

BREAKING CHANGES HERE

Meeting with Sofia and Iulia

ok ok ok no workshop let's stick to what we have and polish it

- interaction from the public changes the space

- facilitate access to the website

- website: intro, brief overview

- at the beginning the performer is already there, idle mode

- (vertical) screen instead of projection ?

- from the essay to something more direct

~

- building block:

- text

- interaction

- space modification

- - [ ] - - [ ] - - [ ] - ->

what do we need:

- timeline

- model for the application, a series of blocks like this:

* text

* duration

* interaction

* scene

28/7 - Prototype setup

app design

-

vvvv client

- VR system

- timeline

- scenes

- text

- interaction

- space

- soundtrack

- scenes

- cms

-

web client

- pages

- main

- interaction

- sound notification

- about

- main

- i11n

- pages

-

web server

- websocket server

small prototype:

- vvvv can send scenes to the server

- datatype:

- text

- interaction

- type

- counter

- xy

- context

- description

- etc

- type

- datatype:

29/7 - Prototype Setup & other

The building block is the Stage. Each stage is a description of what's happening at the edge of the performance: what the screen is displaying, what's inside the VR, what's happening on the users' smartphones.

We can place a series of stages on a timeline and write a dramaturgy that is based on the relation between these three elements.

The model of the stage is something like this:

- text

- scene

-

interaction

- type

- context

-

- text is the text that is going to be displayed on the screen

- scene contains some info or setup for the scene in the vr environment

- interaction holds the type of the interaction and other additional info stored as a context

text and scene are meant to be used in vvvv to build the vr environment and the screen display

interaction is meant to be sent via websocket to the server and from there to the connected clients
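A rough sketch of how the stage model could look as plain data. It is written in JavaScript only for illustration (the actual stages live in vvvv), and names like `createStage`, `interactionMessage`, and the sample texts are made up here, not taken from the project.

```javascript
// Hypothetical helper: builds one stage with the three fields of the model.
function createStage({ text = "", scene = {}, interaction = { type: "none", context: {} } } = {}) {
  return { text, scene, interaction };
}

// Only the interaction part is meant to travel over the websocket,
// so it is serialized on its own as a JSON string with a `type` field.
function interactionMessage(stage) {
  return JSON.stringify({
    type: stage.interaction.type,
    context: stage.interaction.context,
  });
}

// A timeline is just an ordered list of stages (sample values are placeholders).
const timeline = [
  createStage({
    text: "keep touching to be there",
    scene: { name: "presence" },
    interaction: { type: "touch", context: {} },
  }),
  createStage({
    text: "move around",
    scene: { name: "space" },
    interaction: { type: "xy", context: { description: "touchscreen as trackpad" } },
  }),
];
```

Keeping `text` and `scene` out of the message keeps the payload for the phones small, under the assumption that the clients only ever need to know which interaction is active.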

it could be useful to keep track of the connected users.

It could be something like:

- when someone accesses the website a random ID is generated and stored in the local storage of the device. in this way, even if the user leaves the browser or refreshes the page, we can retrieve the same ID from the storage and keep track of who is who without spawning a new user every time there is a reconnection (which with ws happens a lot!)

- maybe the user could choose a username? it really depends on the kind of interaction we want to develop. also i was thinking of ending credits: "with the participation of" and then the list of users

- when connecting and choosing a username, the client sends it to the server, which sends it to vvvv, which stores the users in a dictionary with their ID. Every interaction from the user will be sent to the server and then to vvvv with this ID. in this way interactions can be organized and optimized, as well as linked to the appropriate user.

- tell me more about surveillance capitalism
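The ID idea above could be sketched like this. It is a minimal sketch under assumptions: the storage key `ooc-user-id`, the ID format, and both function names are placeholders, and `storage` stands for anything with `getItem` / `setItem` (in the browser that would be `window.localStorage`).

```javascript
// Client side: reuse the stored ID if there is one, otherwise create and store it.
function getOrCreateUserId(storage, key = "ooc-user-id") {
  let id = storage.getItem(key);
  if (!id) {
    // Placeholder ID format: random base36 chunk plus a timestamp.
    id = Math.random().toString(36).slice(2, 10) + Date.now().toString(36);
    storage.setItem(key, id);
  }
  return id;
}

// Server / vvvv side: one entry per ID, so a reconnection updates the
// existing user instead of spawning a new one.
function registerUser(users, id, username) {
  const existing = users.get(id);
  users.set(id, {
    id,
    username: username ?? existing?.username ?? null,
    connected: true,
  });
  return users.get(id);
}
```

In the browser the call would be `getOrCreateUserId(window.localStorage)`, done once before opening the websocket, so the ID can go along with the first message.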

about text - interaction

even if we can take out excerpts from the essay we wrote, this reading setup is totally different. here our texts need to be formulated like a call to action, or a provocation to trigger the interaction.

a way to acknowledge the public

31/07 - Prototype setup: vvvv

The websocket implementation im using is simple. It just provides these kinds of events:

- on open (when a client connects)

- on close (when a client disconnects)

- on message (when there is a message incoming)

In order to distinguish between different types of message I decided to serialize every text as a JSON string with a field named type. When a message event is fired the server looks at the type of the message and then acts accordingly. Every message triggers a different reaction aka it calls a different function.

In the previous versions the check on the message type was a loong chain of if statements, but that didn't feel right, so I searched a bit how to manage it in a better way.

In the server (node.js) i created an object that uses the message types as keys and the associated functions as values (see: javascript switch object).
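Roughly, the object-instead-of-if-chain idea looks like this. The message types (`login`, `touch`, `xy`), the handler bodies, and the shape of `state` are illustrative guesses, not the project's actual code.

```javascript
// One handler per message type; the key is the `type` field of the JSON message.
const handlers = {
  login: (state, msg) => { state.users[msg.id] = { username: msg.username }; },
  touch: (state, msg) => { state.touching[msg.id] = Boolean(msg.pressed); },
  xy: (state, msg) => { state.positions[msg.id] = { x: msg.x, y: msg.y }; },
};

// Meant to be called from the websocket "on message" event with the raw string.
// Returns true if a handler ran, false if the message was ignored.
function handleMessage(state, raw) {
  let msg;
  try {
    msg = JSON.parse(raw);
  } catch {
    return false; // not valid JSON
  }
  if (!msg || typeof msg !== "object") return false;
  // Own-property check so inherited keys like "toString" are never treated as handlers.
  if (!Object.prototype.hasOwnProperty.call(handlers, msg.type)) return false;
  handlers[msg.type](state, msg);
  return true;
}
```

Adding a new kind of message then means adding one key to `handlers`, without touching `handleMessage`.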

For vvvv I asked for suggestions on the vvvv forum and ended up using a simple factory pattern with a common interface, IMessage, which is then used to process the incoming message based on its type (see: replacing long if chain).

In order to deal with the state of the application (each message operates in a different way and on different things) I created a Context class that holds the global state of the performance, such as the websocket clients and the connected users. The IMessage interface takes this context as well as the incoming message, and so it can operate on the patch.

happy with it! it's much more flexible than the long if snake

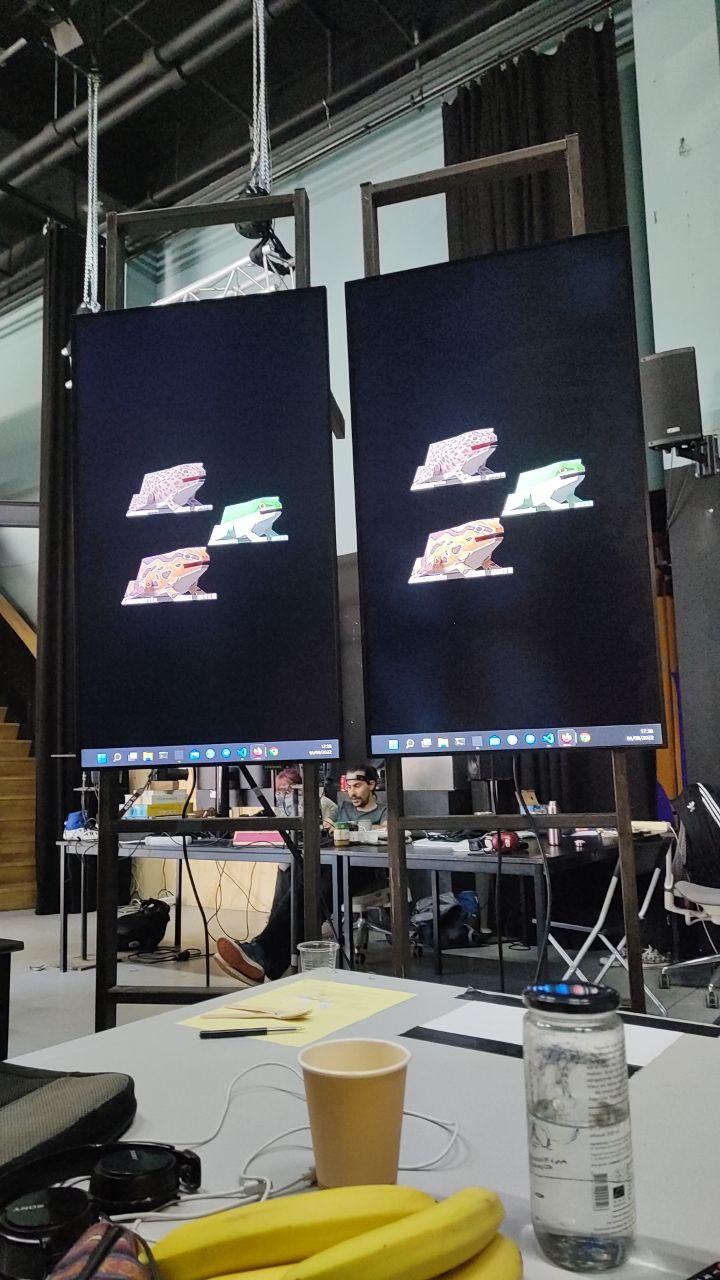

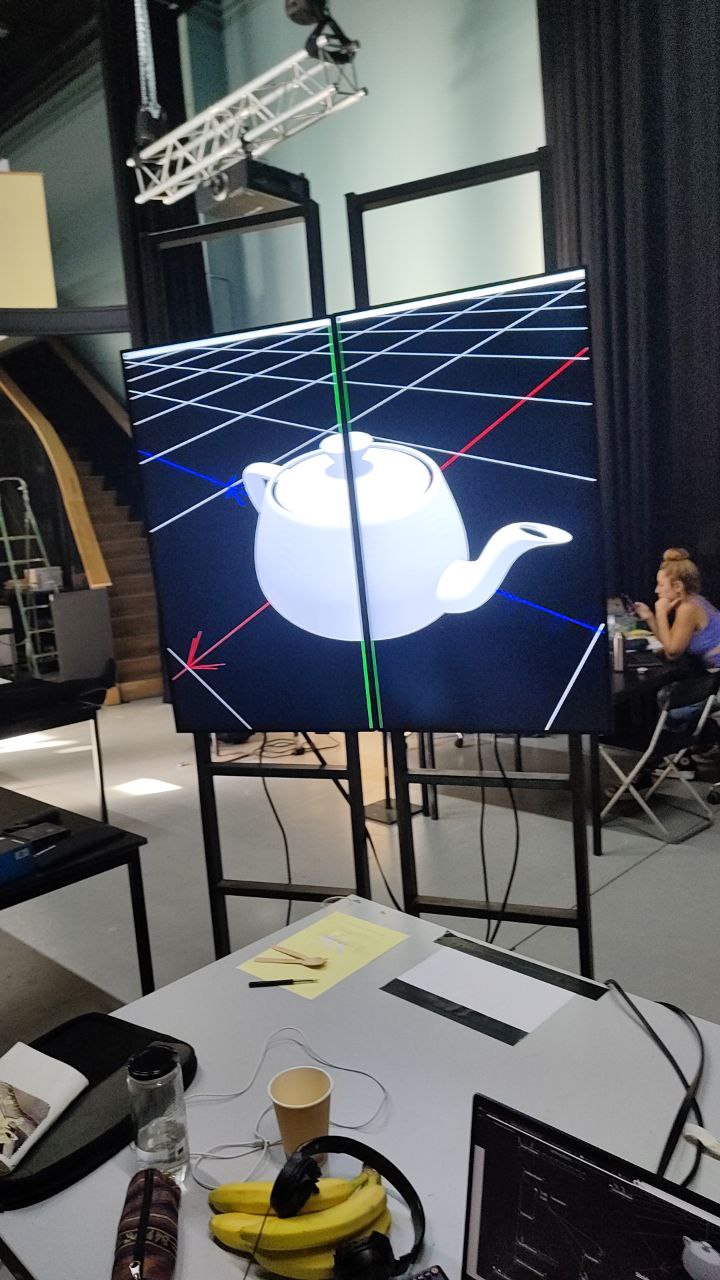

1-2/08 - two Displays & Prototype setup

Yesterday together with Richard we set up the two screens to show the public what's happening inside the VR. Initially they were mounted next to each other, vertically.

With Iulia we thought about how to place them. Instead of keeping them together it would probably be better to use them at the edges of the interactive zone. Even if the screen surface seems smaller, it's a creative constraint & it does more to create the space of the performance.

Ideally the viewer can see both screens and the performer at the same time. The screens can display either the same or different things.

And now some general thoughts:

the username should be central in the visualization of the interaction, since it's the main connection point between what's happening outside and inside? could it be something different than a name? could it be a color? using a drawing as an avatar?


types of interaction

the idea of presence, of being there, together and connected

touching the screen <---means to be connected with ---> the performer

keep touching to be there

a light in the environment

and when the performer gets closer to the light the connected phone plays a notification

maybe it could be enough ?

just use the touchscreen as an xy pointer and make the nature of the pointer change

3/08 - Prototype Setup and doubts

Finished setting up the xy interaction with the clients and vvvv.

The setup with nuxt is messy since it's stuck between nuxt 2 and vue 3. There are a lot of errors that don't depend on the application but rather on the dependencies and it's really really annoying, especially since it prevents solid design principles.

I'm thinking of rewriting the web app using only Vue, instead of nuxt, but im a bit afraaaaaidd.

4/08 - Script

Im trying to understand which setup to use to rewrite the application without nuxt. Currently im looking into fastify + vite + vue, but it's too many things altogether and im a bit overwhelmed.

So now a break and let's try to list what we need and the ideas that are around to organize the work of the next week.

Hardware Setup:

- 2 vertical displays, used also as vive base station supports, placed at opposite corners of the stage

- PC with Vive connection in the third corner

- Public stands around

Performance Structure

0. before the performance

- the two screens loop:

- Object Oriented Choreography v3.0 (with versioning? it's funny)

- Connect to the website to participate in the performance

- o-o-c.org

-

website:

- access page (choose a name or draw a simple avatar)

- waiting room with short introduction: what is this and how does it work. In 2 sentences.

-

stage:

- performer in idle mode, already inside the vr

- connected users trigger minimal movements?

-

sound:

- first pad sloowly fade in ?

1. performance starts, first interaction: touch

-

two screens:

- direct feedback of interaction

- representation of the space inside the vr

- position of the performer inside the vr (point light?)

-

website:

- touch interaction. users are invited to keep the touchscreen pressed.

- a sentence to create context around the interaction? maybe not, because:

- to interact the user doesn't need to look at the phone, it's more an intuitive and physical thing

-

stage:

- performer st. thomas movement to invite for the touch interaction

- the public is invited to follow the performer ? (for example releasing the touch suddenly, some kind of slow rhythm, touch pattern, explore this idea as introduction?)

-

sound:

- ost from PA

- interaction from the phones

-

interaction:

- every user is an object in the VR space, placed in a random-supply-chain to build a meaningful space for the choreography. the object is visible only when the user is touching the screen.

- the performer can activate these objects by getting closer

- when an object is activated it sends a notification to the smartphone of the user, which plays some sound effect

-

build on this composition

-

bonus: the longer the user keeps pressing, the bigger the object grows? so it's activated more frequently and this could lead to some choir and multiple activations at the same time?
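The activation-by-proximity idea from the list above, as a small sketch. Everything here is an assumption made for the example: the object shape (`userId`, `position`, `visible`, `scale`, `active`), the default radius, and the idea that a bigger object simply has a bigger activation radius.

```javascript
// Euclidean distance between two points in the VR space.
function distance(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);
}

// Call on every update with the performer position.
// Returns the user IDs whose object just became active, i.e. the phones
// that should receive a notification right now.
function updateActivations(performer, objects, radius = 1.0) {
  const activated = [];
  for (const obj of objects) {
    // An object exists in the space only while its user is touching the screen.
    const near = obj.visible && distance(performer, obj.position) <= radius * obj.scale;
    if (near && !obj.active) activated.push(obj.userId);
    obj.active = near;
  }
  return activated;
}
```

Because only the transition from inactive to active is reported, a performer standing still next to an object triggers one notification, not one per frame.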

[]need a transition[]

2. second interaction: XY

-

two screens:

- one screen shows the representation of the space seen from the outside, kinda aerial view

- one screen shows a focus on one user at a time

- for objects going around, think of the kind of animations of everything, for example

-

website:

- touch xy interaction

- double tap to recognize which one are you? with visual feedback like hop. maybe not necessary

-

stage:

- for sure the beginning of the interaction will be super chaotic, with everyone going around like crazy.

- the goal could be to go from this initial chaos to some kind of circular pattern, that seems the most iconic and easy thing

- the performer invites to circular movements, growing in intensity.

- actually this could be a great finale, using the same finale of the last iteration

-

sound:

- ost from PA

- focus notification (the smartphone rings when the user is in focus on the screen)

-

interaction:

- users are invited to use the touchscreen as a trackpad, to move into the space.

- how not to be ultra chaotic from the start? or:

- how to facilitate this chaos toward something more organic
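A sketch of the trackpad mapping, plus one possible way to take the edge off the initial chaos. Assumptions: touch coordinates arrive already normalized to 0..1, the stage is a rectangle centered on the origin, and the 4 x 4 size and the smoothing amount are placeholder values. The smoothing is just one option, not something decided in these notes.

```javascript
// Maps a normalized touch position (u, v in 0..1) to a point on the stage floor.
function touchToStage(u, v, stage = { width: 4, depth: 4 }) {
  const clamp = (n) => Math.min(1, Math.max(0, n));
  return {
    x: (clamp(u) - 0.5) * stage.width,
    z: (clamp(v) - 0.5) * stage.depth,
  };
}

// Exponential smoothing: on each update the object moves only a fraction
// of the way toward the latest touch position, so jittery input becomes a drift.
function smooth(current, target, amount = 0.1) {
  return {
    x: current.x + (target.x - current.x) * amount,
    z: current.z + (target.z - current.z) * amount,
  };
}
```

A lower `amount` early in the section and a higher one later could be a way to move from slow, readable motion to more direct control.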

would be nice to have a camera system that lets you position the camera in preview mode and then push it to one of the screens, overriding the preset

5-08

Notes from the video of OOC@Zone Digitali. The names of the movements refer to the essay triggers.

list of triggers:

- Is performer online?

is great for the beginning. It could start super minimal and imperceptible, transition from the idle mode to the beginning of the performance, with slowly increasing intensity

- San Tommaso, Janus

St. Thomas could be an opening, for an explicit invitation to the touch interaction. hold the position and insist.

also Janus' looking around and searching could be the reaction when someone connects and is placed in the space. When someone touches, look at them in the virtual place and then stay. it's a first way to create the impression of the environment that surrounds the performer

↓↑

- Fingertips, Scribble

are a good way to elaborate on the idea of touch interaction. focus on fingers as well as focus on the surface those fingers are sensing. Bring new consistency to the touchscreen, transform its flat and smooth surface into something else.

- Perimetro, Area

Nice explorative qualities. Could be used for notification composition during the first interaction? After the invitation, a moment of composition.

~

- Tapping, Scrolling

floor movements for a second part ? between interaction touch and xy

- Logic & Logistic, Efficiency

Stationary movement that could introduce the performer's point of view. The body is super expressive and the head is still, so the point of view in the VR is not crazy from the start.

- Knot, Velocity

The stationary movement could then start traversing the space more, integrating also the quality and intensity of efficiency and velocity.

~

- Scrolling

could be used during the xy interaction, again as a form of invitation

- Collective Rituals

the final sequence that builds on a circular pattern of the xy interaction, slower and slower

- Optical

- Glitch

- Fine

Need to finish this analysis but for now here is a draft structure for the performance. Eventually will integrate it with the previous two sections: the Performance Structure and the trigger notes.

Structure?

I

Invitation and definition of the domain: touch interaction and public participation

- a. invitation

- extend the extents of the touchscreen

- create a shared consistency for the screen surface

- b. composition

- explore it as a poetic device

- II

????

III from participation to collective ritual

6-08

Two ideas for the performance:

a. Abstract Supply Chain

--> about the space where the performer dances

The space in the virtual environment resembles an Abstract Supply Chain more than an architectural space. It's an environment not made of walls, floor, and ceiling, but rather a landscape filled with objects and actors, the most peculiar one being the performer.

We can build a model that scales with the connection of new users. Something that makes sense with 10 people connected as well as 50. Something like a fractal, that is legible at different scales and intensities.
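One possible way to get a layout that reads the same with 10 or 50 users is a golden-angle (sunflower) spiral. To be clear, this is a swapped-in technique for the sake of an example, not a fractal and not something chosen in these notes; `spiralPosition`, `layout`, and the `spacing` value are hypothetical.

```javascript
// Golden angle in radians (about 2.4).
const GOLDEN_ANGLE = Math.PI * (3 - Math.sqrt(5));

// Position of the n-th connected user on the floor plane.
// The radius grows with the square root of the index, so density stays roughly even.
function spiralPosition(index, spacing = 0.5) {
  const radius = spacing * Math.sqrt(index);
  const angle = index * GOLDEN_ANGLE;
  return { x: radius * Math.cos(angle), z: radius * Math.sin(angle) };
}

// Positions for `count` users, in connection order.
function layout(count, spacing = 0.5) {
  return Array.from({ length: count }, (_, i) => spiralPosition(i, spacing));
}
```

A useful property for a live performance: the position of a user depends only on their index, so nobody already in the space moves when someone new connects.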

Something between a map, a visualization, a constellation. Something that makes sense in a 3d environment and in a 2D screen or projection.

Lot of interesting input here: Remystifying supply chains

b. Object Oriented Live Action RolePlay (LARP)

--> about the role of the public

We have a pool of 3d objects related to our theme: delivery packages, bike, delivery backpack, kiva robot, drone, minerals, rack, servers, gpu, container, etc. a proper bestiary of the zone.

Every user is assigned an object at login. The object you are also influences, more or less, your behavior in the interaction. Im imagining it in a subtle way, more something related to situatedness than theatrical acting. An object oriented LARP.

How wide or specific should our bestiary be? A whole range of different objects and consistencies (mineral, vegetal, electronic, etc.) or just one kind of object (shipping parcels for example) explored in depth?

From here --> visual identity with 3D scan?

The Three Interactions

All the interactions are focused on the physical use of the touchscreen. They are simple and intuitive gestures that dialogue with the movements of the performer.

There are three sections in the performance and one interaction for each. We start simple and gradually add something, in order to introduce the mechanism slowly.

The three steps are:

- presence

- rhythm

- space

Presence is the simple act of touching and keeping the screen pressed. Ideally it is an invitation for the users to keep their finger on the screen the whole time. A way for the user to say: hello im here, im connected. For the first part of the performance the goal is to transform the smooth surface of the touchscreen into something more. A sensitive interface, a physical connection with the performer, a shared space.

Rhythm takes into account the temporality of the interaction. The touch and the release. It gives a little more freedom to the users, without being too chaotic. This interaction is used to trigger events in the virtual environment such as the coming into the world of the object.

Space is the climax of the interaction and maps the position on the touchscreen into the VR environment. It allows the user to move around in concert with the other participants and the performer. Here the plan is to take the unreasonable chaos of the crowd interacting and build something choreographic out of it, with the same approach as the collective ritual ending of the previous iteration.

Each section / interaction is developed in two parts:

- an initial moment of invitation where the performer introduces the interaction and offers it to the user via something similar to the functioning of mirror neurons. Imagine the movement for St. Thomas as an invitation to keep pressing the touchscreen.

It is a moment that introduces the interaction to the public in a practical way, instead of following a series of cold instructions. It is also a way to present the temporality and the rhythm of the interaction.

- a following moment of composition, in which the interactive mechanism is explored aesthetically. For Presence it is the way the performer interacts with the objects inside the space. For Space it is facilitating and leading the behaviour of the users from something chaotic to something organic (from random movements to a circular pattern?)

Tech Update

Started having a look at reactive programming. Since everything here is based on events and messages flowing between clients, server and vvvv, the stream approach of reactive programming makes sense to deal with the flows of data in an elegant way.

Starting from here: The introduction to Reactive Programming you've been missing
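To make the stream idea concrete, here is a tiny hand-rolled stream with `filter` and `map`. It is not the API of any reactive library, just the bare mechanism; `createStream` and the message fields are made up for the example.

```javascript
// Minimal push-based stream: values are emitted and flow to the subscribers.
function createStream() {
  const listeners = [];
  const stream = {
    subscribe(fn) {
      listeners.push(fn);
      return stream;
    },
    emit(value) {
      listeners.forEach((fn) => fn(value));
    },
    // Derived stream that only lets through the values matching the predicate.
    filter(predicate) {
      const out = createStream();
      stream.subscribe((value) => { if (predicate(value)) out.emit(value); });
      return out;
    },
    // Derived stream with every value transformed by fn.
    map(fn) {
      const out = createStream();
      stream.subscribe((value) => out.emit(fn(value)));
      return out;
    },
  };
  return stream;
}

// All incoming websocket messages go into one stream...
const messages = createStream();
// ...and each part of the app derives only the flow it cares about.
const positions = messages
  .filter((m) => m.type === "xy")
  .map((m) => ({ id: m.id, x: m.x, y: m.y }));
```

A real library would add unsubscription, error handling, and time-based operators such as throttling, which is probably where the approach pays off with many phones sending xy updates.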

For notification and audio planning to use howler.js, probably with sound sprites to pack different sfx into one file. https://github.com/goldfire/howler.js
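A sketch of how the sprite definition could be generated instead of typed by hand. howler.js describes each sprite as `[offset, duration]` in milliseconds; the helper name, the clip names, the file names, and the gap between clips are assumptions for the example.

```javascript
// clips: [{ name, durationMs }] in the order they appear in the packed audio file.
// gapMs: silence between clips, an assumption about how the file gets exported.
function buildSpriteMap(clips, gapMs = 0) {
  const sprite = {};
  let offset = 0;
  for (const clip of clips) {
    sprite[clip.name] = [offset, clip.durationMs];
    offset += clip.durationMs + gapMs;
  }
  return sprite;
}

// In the browser, with howler.js loaded, usage would look roughly like:
//   const sfx = new Howl({ src: ["sfx.webm", "sfx.mp3"], sprite: buildSpriteMap(clips, 100) });
//   sfx.play("activation");
```

With a single packed file the phones download one asset, which matters when everyone connects at the same moment on the venue wifi.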

10/08 and 9/08 and 11/08

second interaction

how to call the Three Interactions? TI? 3I ? III I ? ok stop

it's useful to imagine the lifecycle of the object to think about the three interactions.

1 presence___presence____being there

2 rhythm_____quality_____in a certain way

3 space______behaviour___and do things

So for what concerns the second interaction:

- could be related to the configuration of the object, a way to be more or less structured

- for example start from a totally deconstructed object and gradually morph into its normal state

- an assemblage of different parts

Following the timeline of the performance we could set up a flow of transformation for every object: at the beginning displacing the object randomly, messing around with its parts. We could gradually dampen the intensity of these transformations, reaching in the end a regular model of the object.

These transformations are not continuous, but triggered by the tap of the user. They could be seen as snapshots or samples of the current level of transformation. In this way, with either a high or a low sample rate we can get a rich amount of variation. This means that if we have a really agitated moment with a lot of interactions the transformations are rich as well, with a lot of movement and randomness. But the same remains true when the rhythm of interaction is lower and calmer: it only gets the right amount of dynamics.

One aspect that worries me is that these transformations could feel totally random, without any linearity or consistency. I found a solution to this issue by applying some kind of uniform transformation to the whole object, for example a slow, continuous rotation. In this way the object feels like a single entity even when all its parts are scattered around randomly.

The transformation between the displaced and the regular states should take into account what I called incremental legibility, that is:

- progressively transform more features (position, rotation, scale, texture, colors, etc)

- progressively decrease intensity of the transformations

in this way we could obtain some kind of convergence of the randomness.

Actually the prototype works fine just with the decreasing intensity, i haven't tried yet to transform the different features individually or in a certain order.
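The decreasing-intensity part can be sketched in a few lines. This is not the vvvv patch: the linear falloff, the `maxOffset` value, and the function names are assumptions, and only position is displaced here.

```javascript
// progress: 0 at the start of the section, 1 at the end.
// Returns the maximum displacement allowed at this point of the timeline.
function intensity(progress, maxOffset = 1.0) {
  const p = Math.min(1, Math.max(0, progress));
  return maxOffset * (1 - p); // linear falloff, an assumption
}

// Called once per tap: samples a new random offset for every part of the object,
// scaled by the current intensity. `random` can be swapped for a seeded source.
function sampleDisplacement(partCount, progress, random = Math.random) {
  const amount = intensity(progress);
  return Array.from({ length: partCount }, () => ({
    x: (random() * 2 - 1) * amount,
    y: (random() * 2 - 1) * amount,
    z: (random() * 2 - 1) * amount,
  }));
}
```

Since the offsets are sampled only on a tap, a busy audience produces many snapshots and a calm one produces few, while both converge to the regular model as `progress` approaches 1.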

Also: displacing the textures doesn't look nice. It just feels broken and glitchy, not really an object.

for what concerns the display:

- in one screen we cluster all the objects in a plain view, something like a grid (really packed i presume? it depends on the amount)

- in the other we could keep them as they were in the first interaction, and present them through the point of view of the performer, keeping the sound notification when she gets closer and working as a close-up device.

we could also display the same thing on both screens, to lower the density of objects and focus more on the relationship between the performer and the public as a whole, attuning the rhythm

how does this interaction interact with the choreography? is it enough for the performer to be just a point of view?

the big practical recap

0. Intro

-

Interaction

- user logs in the website

- there is a brief introduction

- there are simple instructions: turn up volume

- they can either select a 3D object or are given one randomly ???

-

Stage

- performer in idle mode, already inside the VR

- connected users trigger minimal, imperceptible movements

- references:

- isPerformerOnline? trigger

-

Two screens (loops)

- Object Oriented Choreography v3.0

- To participate in the performance connect to o-o-c.org

- QR code

-

Website

- Start page - brief overview + username + enter button

- Instruction + Waiting room

-

Sound

- first pad slowly fades in

-

TODOs

- Screens UI:

- title

- call to connect

- website url

- Website UI:

- Start page - brief overview + enter?

- Instructions

- Username input or alternate identifier

- Waiting room

- VR:

- New User notification

- Screens UI:

1. Presence

Presence is the simple act of touching the screen and keeping it pressed. Ideally it is an invitation for the users to keep their finger on the screen the whole time. A way for the user to say: hello im here, im connected. For the first part of the performance the goal is to transform the smooth surface of the touchscreen into something more. A sensitive interface, a physical connection with the performer, a shared space.

-

Interaction

- activated by touch press.

- user needs to keep pressed in order to stay connected to the performer.

- when users press they appear in the virtual environment in the form of 3D object.

- The object is still, its position in the space is defined by the Abstract Supply Chain structure.

- When the performer gets close to users, a notification is sent to their smartphone and plays some sound effects.

- Every object has its own sprite of one-shot sounds.

- bonus: the longer the user keeps pressing, the bigger the object grows? so it's activated more frequently and this could lead to some choir and multiple activations at the same time?
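A rough sketch of the "performer gets close" notification from the list above. Everything here is hypothetical: the `ProximityTrigger` name, the fixed `radius`, and the choice to fire only once each time the performer enters the radius (so the phone does not replay the sound on every frame). Positions are plain (x, y, z) tuples.

```python
import math


class ProximityTrigger:
    """Report which user objects the performer has just come close to."""

    def __init__(self, radius: float):
        self.radius = radius
        self.inside = set()  # ids of objects the performer is currently near

    def update(self, performer, objects):
        """Return the ids whose radius the performer entered since the last call.

        `performer` is an (x, y, z) tuple, `objects` maps user id -> (x, y, z).
        """
        entered = []
        for user_id, position in objects.items():
            near = math.dist(performer, position) <= self.radius
            if near and user_id not in self.inside:
                entered.append(user_id)  # fire once on entry
                self.inside.add(user_id)
            elif not near:
                self.inside.discard(user_id)  # re-arm for the next approach
        return entered
```

The "bonus" idea of growing objects could be tried by storing one radius per user instead of a single shared one: a bigger object would then be entered more often.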

-

Stage

- the performer guides the public to mimic her at the beginning with the invitation

- the disposition of objects in space offers a structure for composition

- references:

- st. thomas, scribble, fingertips triggers (invitation)

- perimetro, area triggers (composition)

-

Two screens

- Direct feedback of interactions

- Representation of the space inside the VR? Structure of the ASC

- Position of the performer inside the VR

-

Website

- press interaction. users are invited to keep the touchscreen pressed.

- touch feedback

- audio feedback when the performer gets closer

-

Sound

- Audio track from PA

- Interaction sfx from the phones

-

TODOs

- Sounds for the objects

- Soundcheck to balance PA and smartphone volume

- 3D objects

- which objects?

- how many?

- how to provide variation?

- find & clean

- VR

- define the disposition in 3D environment --> abstract supply chain

- Screens:

- define how the objects are displayed: how to get to the second interaction?

- Shall we see the object right from the start? Or they could be introduced later?

2. Rhythm

Rhythm takes into account the temporality of the interaction. The touch and the release. It gives a little more freedom to the users, without being too chaotic. This interaction is used to trigger events in the virtual environment, such as the coming into the world of the object.

- Interaction

- activated by tap

- tapping causes transformations in the object-avatar

- at the beginning the transformations are intense, the objects totally deconstructed

- the transformations get less and less strong toward the end of the section

- reconstruction of the object

-

Stage

- the performer intercepts the interactions of the public: tries to influence rhythm and intensity

- introduce the performer's point of view in the virtual environment

- references:

- tapping (invitation)

- logic & logistic, efficiency (stationary, high intensity)

- scroll, optical triggers (stationary, low intensity)

-

Two screens

- Cluster all the objects in a plain view, something like a grid (could be really packed?)

-

Keep objects where they were in the first interaction, POV performer working as a close-up device.

-

could also display the same thing on both screens, to lower the density of objects and focus more on the relationship between the performer and the public as a whole, attuning the rhythm

-

Website

- tap interaction. users are invited to tap onto the screen.

- visual instant feedback on phone

- audio feedback when performer gets close

-

Sound

- Audio track from PA

- Keep the sound notification from smartphone when she gets close

- Sound on smartphone could respond to tap when the performer gets close

-

TODOs

- Sound effects

- 3D Objects import and optimization

3. Space

Space is the climax of the interaction and maps the position on the touchscreen into the VR environment. It allows the user to move around in concert with the other participants and the performer. Here the plan is to take the unreasonable chaos of the crowd interacting and build something choreographic out of it, with the same approach as the collective ritual ending of the previous iteration.

- Interaction

- activated by touch drag

- the user interacts with the touchscreen as a pointer

- dragging moves the user's object in the VR space

- after initial chaos both performer and directors suggest circular movement
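A possible way to map the drag onto the space, as a sketch only. It assumes a y-up world with the floor centred on the origin, a top view, and made-up floor dimensions (`floor_w`, `floor_d`); none of this is taken from the actual patch.

```python
def touch_to_world(x_px, y_px, screen_w, screen_h, floor_w=10.0, floor_d=10.0):
    """Map a touch position in pixels to an (x, y, z) point on the floor.

    The touch is normalised to 0..1 on both axes (clamped, so a finger sliding
    off the edge keeps the object on the floor), then centred on the origin.
    """
    u = min(max(x_px / screen_w, 0.0), 1.0)
    v = min(max(y_px / screen_h, 0.0), 1.0)
    return ((u - 0.5) * floor_w, 0.0, (v - 0.5) * floor_d)


# The centre of a 400 x 800 screen lands on the centre of the floor.
print(touch_to_world(200, 400, 400, 800))  # (0.0, 0.0, 0.0)
```

Normalising first means phones with different resolutions all share the same floor. If the top view has to stay roughly 1:1 with the phones, the floor ratio should be close to the screen ratio.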

-

Stage

- the performer and the users move in the same space

- the performer tries to intercept the movements of the public

- working with directions, speed and intensity

- references:

- invitation ???

- collective ritual trigger (composition) --> to outro

-

Two screens

- view is from top and is 1:1~ with smartphone screens

- could be either:

- the point of view of the performer

- following object

- static close-ups

-

Website

- xy interaction. users are invited to drag around the screen.

- visual instant feedback on phone?

- audio feedback when getting closer to the performer?

-

Sound

- Audio track from PA

- Keep the sound notification from smartphone?

-

TODOs

- Sounds: Better way to use notification?

4. Outro

-

Interaction

- part 3. slow down till it stops

-

Two screens

- everything black

- overlay fade in

- title

- credits

- participants' name ?

-

Website

- overlay fade in

- title

- credits

- participants' name ?

- initial overview

- overlay fade in

-

Stage

- performer gets out from VR

- thanks & goodbye

-

Sound

- Audio track from PA ends

- No more notifications from smartphone

-

TODOs

- credits system?

- UI screens

- UI website

12/08 vvvv app design

placeholders:

0.

- intro slides loop

- 2d text and graphics

- 3d objects (qr code for website?)

- vr UI notification for user connection

output manager a system where you can decide where to render: - screen 1 - screen 2 - both screens

13/08 and 14/08 and 15/08

Last Mile

For the objects we will focus on Last Mile Logistics. The moment in which things shift from the global to the local, from an abstract warehouse to your doorstep. Last Mile Logistics is tentacular, it is made of vectors that head toward you.

We asked Nico for some suggestions for good quality 3D models and he replied with a list from Sketchfab. Thanks a lot.

Here are the ones we already imported:

- https://sketchfab.com/3d-models/warehouse-shelving-b261a6ff8d7243df9d20acaabc76aab0

- https://sketchfab.com/3d-models/platform-trolley-fcedc57affa34dba9870cefd9668e8fe

- https://sketchfab.com/3d-models/pallet-truck-0419ee072fa4427aaadc2afbcae2894d

- https://sketchfab.com/3d-models/hand-truck-f539455450ca40df8843a602aafbda91

- https://sketchfab.com/3d-models/set-of-cardboard-boxes-8986ba512f704ac5b253286a0d1ad8bb

- https://sketchfab.com/3d-models/plastic-crate-74d5ce81aacd4c9c82290d3f90635513

- https://sketchfab.com/3d-models/pallet-ad8768f522184364af70b56846d10fcf

Will need to credit the authors of the models:

"pallet truck" (https://skfb.ly/6UV88) by Kwon_Hyuk is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Platform Trolley" (https://skfb.ly/6RJto) by louis-muir is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Hand Truck" (https://skfb.ly/6VGAH) by HippoStance is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Warehouse Shelving" (https://skfb.ly/on6oy) by jimbogies is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Pallet" (https://skfb.ly/os7YC) by Marsy is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Plastic Crate" (https://skfb.ly/orQTM) by Virtua Con is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Set of Cardboard Boxes" (https://skfb.ly/onr6S) by NotAnotherApocalypticCo. is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"8" (https://skfb.ly/6Aovp) by Roberto is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Plastic Milk Crate Bundle" (https://skfb.ly/ov7Nn) by juice_over_alcohol is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Compostable Burger Box .::RAWscan::." (https://skfb.ly/onGUV) by Andrea Spognetta (Spogna) is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Barrel" (https://skfb.ly/6TInO) by Toxic_Aura is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Foodpanda Bag" (https://skfb.ly/6TUGF) by AliasHasim is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Japanese Road Signs (28 road signs and more)" (https://skfb.ly/o8WBK) by bobymonsuta is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Coffee Paper Bag 3D Scan" (https://skfb.ly/6W88p) by grafi is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Traffic cone (Game ready)" (https://skfb.ly/6SqHs) by PT34 is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Warning Panel" (https://skfb.ly/6BQJS) by Loïc is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"An Office Knife" (https://skfb.ly/SZKT) by runflyrun is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Tier scooter" (https://skfb.ly/opxDJ) by Niilo Poutanen is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Bike Version 01" (https://skfb.ly/6UHzZ) by Misam Ali Rizvi is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"CC0 - Bicycle Stand 4" (https://skfb.ly/ovLvI) by plaggy is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Wooden Crate" (https://skfb.ly/otvLA) by Erroratten is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"8-Inch GE Dr6 Traffic Signals" (https://skfb.ly/otwAu) by Signalrenders is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Power Plug /-Outlet /-Adapter | Connector Strip" (https://skfb.ly/ooUPN) by BlackCube is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Simple turnstile" (https://skfb.ly/o96tU) by LUMENE is licensed under Creative Commons Attribution-NonCommercial (http://creativecommons.org/licenses/by-nc/4.0/).

"Microwave Oven" (https://skfb.ly/6RrWD) by aqpetteri is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Camera" (https://skfb.ly/ooLVM) by Shedmon is licensed under Creative Commons Attribution (http://creativecommons.org/licenses/by/4.0/).

"Cutting pliers" (https://skfb.ly/otMQv) by 1-3D.com is licensed under Creative Commons Attribution-ShareAlike (http://creativecommons.org/licenses/by-sa/4.0/).

OO Graphics Dasein with Iulia

Iulia came for the weekend to work on the visual! We spent a full-immersion graphic design visual dasein weekend to glue everything together and the results are nice.

For the screens we decided on a physical UI, condensing everything into a 3D quad inserted into the scene with the other objects.

For the website we went for the same big square concept and used the same palette. Total black and blue. Grazie Ragazzi & Forza Atalanta.

*

- Timelapse

TODO: img

Varia

- semantic versioning for the title? https://semver.org/

Aqua Planning

TODO:

-

load assets in a clever way (load once and then reuse?)

-

scenes

- intro:

- fix assets

- presence: draft

- link to users did it

- implement audio system

- rhythm: draft

- link to users

- implement transformations

- how things are scattered around ???

- pov performer

- space:

- link to users

- implement instancing

- pov performer

- outro:

- object

- text on textures

- intro:

- organize scenes

-

timeline

-

web

- user interaction

- sound sprites ?

- custom domain

-

essay

-

server

- pickup behaviour

- !!! optimization !!!

-

scene manager:

- vvvv

- server

-

user <----> 3d models?

- how to instantiate

24/08/2022

Our video shooting is going to be on Sunday 28th. We will film a bit of the rehearsal with Sofia and say something about the project. Need to prepare something so as not to look too dumb or complicated.

Finally decided to approach the assets problem: how to load an incredible amount of 3d models and materials in the patch? The answer is: via the Stride Game Studio. I don't like it, but it works fine and gives us fewer import and loading problems, since every asset is compiled and pre-loaded when the patch opens. Or something like that, im not super sure.

So these are the specifics to load things:

- Stride Game Studio 4.0.1.1428 (the same that vvvv is using, see in the About panel or in the dependencies)

- Models are imported in OBJ format to keep the meshes separated.

- Merge Meshes in the model panel should be checked, otherwise vvvv will crash.

- LoadProject node in vvvv and select the .csproj of the game.

Things can be organized in scenes, that could be an interesting way to deal with the different interaction moments. Let's see.

TODO:

- Text for sunday

- Some nice and funny desktop setup to show that we are working with technology 👩💻

- Import models and material in the stride project

- Refactor vvvv patch to work with the stride project

- Do we want to print the essay? On the AH maps?

25/08

Yesterday night loaded the first batch of objects into Stride. It required a bit of time to setup a proper workflow.

This morning refactored the patch to work with assets from the Stride Project. Now there is an Object node that takes the name of the model and returns an entity with the various parts of the 3D object as children, with the right materials etc. In this way we can set individual transforms on the elements and decompose the objects into pieces.

next for today:

-

new 3d objects : download - break - import DONE

OK SELECTA 3D Objects

- VLC Media Player

- Warning Panel

- Traffic Signals

- Cutting pliers

- Power Plug

- Microwave

-

Surveillance Cam

- Bike

-

refactor presence with assets first thing in the morning and then

- refactor rhythm with assets (actually implement? dont remember if it's already there)

(the way to apply the instancing transform to the single elements of the 3d model is still a bit clunky but let's see) (maybe it's enough to implement it in a modular way so the two scenes work with different nodes?)

- maybe now:

- adjust bio & pic for website DONE

- think to the interview on sunday WIP

Francesco Luzzana is a digital media artist from Bergamo, Italy.

Francesco Luzzana (he/him) develops custom pieces of software that address digital complexity, often with visual and performative output. He likes collaborative projects, in order to face contemporary issues from multiple perspectives. His research aims to stress the borders of the digital landscape, inhabiting its contradictions and possibilities. He graduated in New Technologies at Brera Academy of Fine Arts and is currently studying at the master Experimental Publishing, Piet Zwart Institute.

and this will be the picture or maybe the nice one from carmen! should ask her!

Ok ok enough

now for the interview:

two way binding:

attuning to the choreography of objects moved by digital platforms to grasp their

modality

- an interactive performance of contemporary dance

- with a performer inside the VR

- connected with the public via smartphone

- that can transform the space in which she moves

contents

- last mile logistics and the very body of supply chain

- used as interface between our daily lives and the accidental megastructure of digital platforms

- object oriented ontology and object oriented programming

for the shooting:

- sofia POV in 3d space with a lot of objects and that's it

- a bit of vvvv (ws receiver and factory?) and a bit of vscode (ws server)

- smartphone interaction? not sure but could be useful

Also today I got a mail from Leslie 💌 and look at here: pzwiki.wdka.nl/mediadesign/Calendars

Mom im famous im in the PZI Wiki!

todo: send pic to mic

28/08 - 31/08 First rehearsal with Sofia

timeline:

- intro loop

- website: username and confirm

- sofia enters and puts on the hmd

- transition fade out and music starts

- presence

- ambient light off 0

- point light off 0

- website: waiting room

- sofia faces the screen, back to the public

- slowly turns

- fingertips

- st thomas --> cue presence interaction

- website: presence button, sound notification

- point light on, text posi

- website: presence interaction

- ambient light on 1 slow transition --> from 7:30 ~ to 8:00 (.30 min) super ease in

- swap sock transition (--)--> )() --> from 8:20 ~ to 10:30 (2:00 min) ease-in circ ~ ease-in-out circ ???

>>> stop in the middle and then explode at 10:40 !!!! setup light shaft ?

- rhythm

- website: rhythm interaction 10:40

- 10:40 cue audio

- rhythm interaction smartphone gradually to white

- 13:00 force all smartphones white, disable interaction

- 13:10 --> 14:10 transition to performer POV (1 min) - linear ease-out - point light on ambient light off directional light off

- 16:00 --> ambient light on .125 directional light on 1 transition

- 16:45 --> audio cue to transition

- 17:00 --> obj reconstruction fluido ~:40 min not interactive

- 17:40 --> transition to general view, linear, 1 min

- space

- website: space interaction 18:30

- 18:40 zoom out transition endless super slow 100 m away - 21:00

- website: text the zone is the zona as 20:50
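The cues above are all "from time A to time B, go from value X to value Y with some easing". A small sketch of that, assuming times written as mm:ss and a cubic curve standing in for "super ease in"; the function names are made up and the real timeline lives in vvvv.

```python
def mmss(t: str) -> int:
    """Convert a 'mm:ss' timestamp from the timeline into seconds."""
    minutes, seconds = t.split(":")
    return int(minutes) * 60 + int(seconds)


def linear(p: float) -> float:
    return p


def ease_in_cubic(p: float) -> float:
    """Slow start, fast end. A guess at what 'super ease in' could be."""
    return p ** 3


def cue_value(now, start, end, v0, v1, ease=linear):
    """Value of a cue at time `now` (seconds), going from v0 to v1."""
    if now <= start:
        return v0
    if now >= end:
        return v1
    p = (now - start) / (end - start)
    return v0 + (v1 - v0) * ease(p)


# Ambient light 0 -> 1 between 7:30 and 8:00, sampled halfway through.
print(cue_value(mmss("7:45"), mmss("7:30"), mmss("8:00"), 0.0, 1.0, ease_in_cubic))  # 0.125
```

Keeping the cues as data (start, end, values, easing) would also make it easier to shift the whole timeline when the music changes.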

The blurb & about object orientation

Object Oriented Choreography proposes a collaborative performance featuring a dancer wearing a virtual reality headset and the audience itself. At the beginning of the event, the public is invited to log on to o-o-c.org and directly transform the virtual environment in which the performer finds herself. The spectators are an integral part of the performance and contribute to the unfolding of the choreography.

The work offers an approach to technology as a moment of mutual listening: whoever participates is no longer a simple user, but someone who fully inhabits and creates the technological environment in which the performance happens. The performer is not only connected with each of the spectators, but also acts as a conduit that links them together. In this way the show re-enacts and explores one of the paradigms of our contemporary world: the Zone.

The Zone is an apparatus composed of people, objects, digital platforms, electromagnetic fields, scattered spaces, and rhythms. An accidental interlocking of logics and logistics, dynamics and rules that allow it to exist, to evolve, and eventually to disappear once the premises that made it possible come undone. The Zone could be an almost fully automated Amazon warehouse as well as the network of shared scooters scattered around the city. It could be a group of riders waiting for orders outside your favorite take-away, or a TikTok house and its followers.

The research that OOC develops is influenced by the logistic and infrastructural aspect that supports and constitutes this global apparatus. The title of the work itself orbits a gray zone between the theoretical context of Object Oriented Ontology and the development paradigm of Object Oriented Programming. Moving between these two poles the performance explores the Zone: both with the categories useful to interact with hyperobjects such as massive digital platforms, and across the different layers of the technological Stack, with a critical approach to software and its infrastructure. A choreography of multiple entities in continuous development.

06/09

- catchup system

- safari audio seems ok

-

reconnect when socket state is closed

-

white

- rhythm interaction

- move around

- switch pov to global seems off

- ambient light 7:45
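For the "reconnect when socket state is closed" note, one common approach is to retry with a growing delay so the server is not hammered when many phones drop at once. This is a generic sketch and not the o-o-c.org client: `connect` is a stand-in for whatever opens the socket, and the delay values are arbitrary.

```python
import time


def backoff_delays(base=0.5, factor=2.0, cap=8.0):
    """Yield waiting times in seconds: base, base * factor, ... capped at `cap`."""
    delay = base
    while True:
        yield min(delay, cap)
        delay *= factor


def reconnect(connect, max_attempts=5, sleep=time.sleep):
    """Call `connect()` until it returns True.

    Returns the number of attempts used, or None if every attempt failed.
    `connect` is a hypothetical callable that tries to open the socket.
    """
    delays = backoff_delays()
    for attempt in range(1, max_attempts + 1):
        if connect():
            return attempt
        sleep(next(delays))  # wait a bit longer after each failure
    return None
```

The `sleep` parameter is only there so the loop can be tried out without actually waiting.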

Recap 4 december

- Web:

- client: choose your object, accessibility, i18n multi-lang, link to previous versions (longform is actually hosted at v2.o-o-c.org), about and better general info

- server: better users management for credits

- Projection:

- objects material improvement

- enhance background colors

- adjust lines opacity for interaction

- names for rhythm interaction?

- better object positioning (no reshuffle when a user joins or leaves)

- Sound:

- sound design for smartphone, for presence and ending

- OST in v4 timeline?

- Space:

- light design, space setup & projection

- research on addressable led strip? are they cheap? can we use the next performance to test a small led setup?
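About the "no reshuffle" item in the Projection list: one option is to give every user a slot that stays theirs until they leave, and to reuse freed slots for newcomers. A sketch under that assumption; `SlotAllocator`, `slot_to_grid` and the grid layout are hypothetical and not how the current server works.

```python
import heapq


class SlotAllocator:
    """Give each user a stable slot index; freed slots are reused, lowest first."""

    def __init__(self):
        self.slots = {}     # user id -> slot index
        self.free = []      # min-heap of released slot indices
        self.next_slot = 0  # first index never used so far

    def join(self, user_id):
        if user_id in self.slots:  # reconnecting user keeps the same slot
            return self.slots[user_id]
        if self.free:
            slot = heapq.heappop(self.free)
        else:
            slot = self.next_slot
            self.next_slot += 1
        self.slots[user_id] = slot
        return slot

    def leave(self, user_id):
        slot = self.slots.pop(user_id, None)
        if slot is not None:
            heapq.heappush(self.free, slot)


def slot_to_grid(slot, columns, spacing=1.0):
    """Place a slot on a grid on the floor, filling row by row."""
    row, col = divmod(slot, columns)
    return (col * spacing, 0.0, row * spacing)
```

When someone leaves, their place just stays empty until the next person joins, so nobody else's object jumps around.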