ASCII(7) Linux Programmer's Manual ASCII(7)

NAME

ascii - ASCII character set encoded in octal, decimal, and hexadecimal

DESCRIPTION

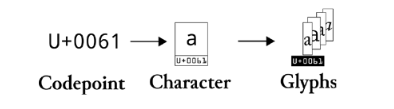

ASCII is the American Standard Code for Information Interchange. It is a 7-bit code. Many 8-bit codes (e.g., ISO 8859-1) contain ASCII as their lower half. The international counterpart of ASCII is known as ISO 646-IRV.
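Because ASCII is a 7-bit code occupying the lower half of such 8-bit codes, a byte is ASCII exactly when its high bit is clear. The following is a minimal sketch of that check in C; the function name is_all_ascii is hypothetical and not part of any standard library.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical helper: returns true if every byte in buf[0..len)
 * has bit 7 clear, i.e. lies in the ASCII range 0x00-0x7F. */
bool is_all_ascii(const unsigned char *buf, size_t len)
{
    for (size_t i = 0; i < len; i++) {
        if (buf[i] & 0x80)      /* high bit set: outside 7-bit ASCII */
            return false;
    }
    return true;
}
```

For instance, a buffer holding "hello" passes the check, while one containing the ISO 8859-1 byte 0xE9 does not.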

The following table contains the 128 ASCII characters.

C program '\X' escapes are noted.

Oct   Dec   Hex   Char                        Oct   Dec   Hex   Char
────────────────────────────────────────────────────────────────────────
000   0     00    NUL '\0' (null character)   100   64    40    @
001   1     01    SOH (start of heading)      101   65    41    A
002   2     02    STX (start of text)         102   66    42    B
003   3     03    ETX (end of text)           103   67    43    C
004   4     04    EOT (end of transmission)   104   68    44    D
005   5     05    ENQ (enquiry)               105   69    45    E
006   6     06    ACK (acknowledge)           106   70    46    F
007   7     07    BEL '\a' (bell)             107   71    47    G
010   8     08    BS '\b' (backspace)         110   72    48    H
011   9     09    HT '\t' (horizontal tab)    111   73    49    I
012   10    0A    LF '\n' (new line)          112   74    4A    J
013   11    0B    VT '\v' (vertical tab)      113   75    4B    K
014   12    0C    FF '\f' (form feed)         114   76    4C    L
015   13    0D    CR '\r' (carriage ret)      115   77    4D    M
016   14    0E    SO (shift out)              116   78    4E    N
017   15    0F    SI (shift in)               117   79    4F    O
020   16    10    DLE (data link escape)      120   80    50    P
021   17    11    DC1 (device control 1)      121   81    51    Q
022   18    12    DC2 (device control 2)      122   82    52    R
023   19    13    DC3 (device control 3)      123   83    53    S
024   20    14    DC4 (device control 4)      124   84    54    T
025   21    15    NAK (negative ack.)         125   85    55    U
026   22    16    SYN (synchronous idle)      126   86    56    V
027   23    17    ETB (end of trans. blk)     127   87    57    W
030   24    18    CAN (cancel)                130   88    58    X
031   25    19    EM (end of medium)          131   89    59    Y
032   26    1A    SUB (substitute)            132   90    5A    Z
033   27    1B    ESC (escape)                133   91    5B    [
034   28    1C    FS (file separator)         134   92    5C    \  '\\'
035   29    1D    GS (group separator)        135   93    5D    ]
036   30    1E    RS (record separator)       136   94    5E    ^
037   31    1F    US (unit separator)         137   95    5F    _
040   32    20    SPACE                       140   96    60    `
041   33    21    !                           141   97    61    a
042   34    22    "                           142   98    62    b
043   35    23    #                           143   99    63    c
044   36    24    $                           144   100   64    d
045   37    25    %                           145   101   65    e
046   38    26    &                           146   102   66    f
047   39    27    '                           147   103   67    g
050   40    28    (                           150   104   68    h
051   41    29    )                           151   105   69    i
052   42    2A    *                           152   106   6A    j
053   43    2B    +                           153   107   6B    k
054   44    2C    ,                           154   108   6C    l
055   45    2D    -                           155   109   6D    m
056   46    2E    .                           156   110   6E    n
057   47    2F    /                           157   111   6F    o
060   48    30    0                           160   112   70    p
061   49    31    1                           161   113   71    q
062   50    32    2                           162   114   72    r
063   51    33    3                           163   115   73    s
064   52    34    4                           164   116   74    t
065   53    35    5                           165   117   75    u
066   54    36    6                           166   118   76    v
067   55    37    7                           167   119   77    w
070   56    38    8                           170   120   78    x
071   57    39    9                           171   121   79    y
072   58    3A    :                           172   122   7A    z
073   59    3B    ;                           173   123   7B    {
074   60    3C    <                           174   124   7C    |
075   61    3D    =                           175   125   7D    }
076   62    3E    >                           176   126   7E    ~
077   63    3F    ?                           177   127   7F    DEL
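The Oct, Dec, and Hex columns of the table are three renderings of the same code value, so any row can be reproduced with printf-style conversions. The sketch below assumes a hypothetical helper named format_codes; it only illustrates the conversions and is not how the table in this page was produced.

```c
#include <stdio.h>

/* Hypothetical helper: writes the octal, decimal, and hexadecimal
 * forms of code c (0-127) into out, separated by single spaces.
 * Returns the value returned by snprintf(). */
int format_codes(unsigned c, char *out, size_t n)
{
    /* %03o: zero-padded 3-digit octal; %u: decimal;
     * %02X: zero-padded 2-digit uppercase hexadecimal. */
    return snprintf(out, n, "%03o %u %02X", c, c, c);
}
```

On a system whose execution character set is ASCII, calling format_codes('\t', buf, sizeof buf) fills buf with "011 9 09", matching the HT row of the table.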

Tables

For convenience, below are more compact tables in hex and decimal.

   2 3 4 5 6 7       30 40 50 60 70 80 90 100 110 120
 -------------      ---------------------------------
0:   0 @ P ` p     0:    (  2  <  F  P  Z  d   n   x
1: ! 1 A Q a q     1:    )  3  =  G  Q  [  e   o   y
2: " 2 B R b r     2:    *  4  >  H  R  \  f   p   z
3: # 3 C S c s     3: !  +  5  ?  I  S  ]  g   q   {
4: $ 4 D T d t     4: "  ,  6  @  J  T  ^  h   r   |
5: % 5 E U e u     5: #  -  7  A  K  U  _  i   s   }
6: & 6 F V f v     6: $  .  8  B  L  V  `  j   t   ~
7: ' 7 G W g w     7: %  /  9  C  M  W  a  k   u  DEL
8: ( 8 H X h x     8: &  0  :  D  N  X  b  l   v
9: ) 9 I Y i y     9: '  1  ;  E  O  Y  c  m   w
A: * : J Z j z
B: + ; K [ k {
C: , < L \ l |
D: - = M ] m }
E: . > N ^ n ~
F: / ? O _ o DEL
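In the hex table, the column heading is the high hex digit and the row label is the low hex digit; in the decimal table, the column heading is the tens value and the row label is the ones digit. A minimal sketch of both lookups follows; the function names from_hex_table and from_dec_table are hypothetical.

```c
/* Hex table: col is the high hex digit (2-7), row is the low
 * hex digit (0x0-0xF). The code is the two digits combined. */
unsigned from_hex_table(unsigned col, unsigned row)
{
    return (col << 4) | row;
}

/* Decimal table: col is the column heading (30, 40, ..., 120),
 * row is the ones digit (0-9). The code is their sum. */
unsigned from_dec_table(unsigned col, unsigned row)
{
    return col + row;
}
```

Under these assumptions, from_hex_table(4, 0x1) and from_dec_table(60, 5) both give 65, the code for A.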

NOTES

History

An ascii manual page appeared in Version 7 of AT&T UNIX.

On older terminals, the underscore code is displayed as a left arrow (called backarrow), the caret is displayed as an up-arrow, and the vertical bar has a hole in the middle.

Uppercase and lowercase characters differ by just one bit (0x20), and the ASCII character 2 (0x32) differs from the double quote (0x22) by just one bit, too. That made it much easier to encode characters mechanically or with a non-microcontroller-based electronic keyboard, and that pairing was found on old teletypes.
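The single-bit relationships above can be illustrated with exclusive-or. This is a sketch only, assuming an ASCII execution character set; the names toggle_case and shift_pair are hypothetical.

```c
/* Hypothetical helper: flips bit 0x20 to switch an ASCII letter
 * between uppercase and lowercase; other codes are returned
 * unchanged. */
int toggle_case(int c)
{
    if ((c >= 'A' && c <= 'Z') || (c >= 'a' && c <= 'z'))
        return c ^ 0x20;
    return c;
}

/* Hypothetical helper: flips bit 0x10, the bit that separates
 * the character 2 (0x32) from the double quote (0x22). */
int shift_pair(int c)
{
    return c ^ 0x10;
}
```

With these definitions, toggle_case('a') yields 'A' and shift_pair('2') yields the double quote.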

The ASCII standard was published by the United States of America Standards Institute (USASI) in 1968.

SEE ALSO

charsets(7), iso_8859-1(7), iso_8859-10(7), iso_8859-11(7), iso_8859-13(7), iso_8859-14(7), iso_8859-15(7), iso_8859-16(7), iso_8859-2(7), iso_8859-3(7), iso_8859-4(7), iso_8859-5(7), iso_8859-6(7), iso_8859-7(7), iso_8859-8(7), iso_8859-9(7), utf-8(7)

COLOPHON

This page is part of release 4.16 of the Linux man-pages project. A description of the project, information about reporting bugs, and the latest version of this page, can be found at https://www.kernel.org/doc/man-pages/.

Linux 2016-10-08 ASCII(7)