A (Proto) Cybernetic Explanation


<div class="quote-box">

<p class="quote">Kenneth Craik: “''an essential quality of neural machinery is that it expresses the capacity to model external events''.”<ref>K. Craik, ''The Nature of Explanation'' (1943)</ref></p>

<p class="quote">Kenneth Craik: “[...] ''our brains and minds are part of a continuous causal chain which includes the minds and brains of other men and it is senseless to stop short in tracing the springs of our ordinary, co-operative acts''.”<ref>K. Craik, ''The Nature of Explanation'' (1943)</ref></p>

<p class="quote">Warren McCulloch: ''“In 1943, Kenneth Craik published his little book called ''The Nature of Explanation'', which I read five times before I realized why Einstein said it was a great book” […] “[Kenneth Craik’s] work has changed the course of British physiological psychology. It is close to cybernetics. Craik thought of our memory as a model of the world with us in it, which we update every tenth of a second for position, every two for velocity, and every three for acceleration as long as we are awake.”''<ref>Warren S. McCulloch, The Beginning of Cybernetics, Macy Conferences on Cybernetics, Vol. 2, p. 345</ref></p>

| Line 7: | Line 7: | ||

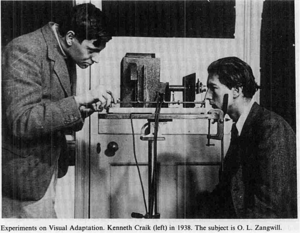

[[file:image_processingCraik.png|thumb|''Kenneth Craik at work'']] | [[file:image_processingCraik.png|thumb|''Kenneth Craik at work'']] | ||

Assessments of the cybernetic era often stress that the key members of the cybernetic movement were engaged in military research during World War II, and that it was in this context that the relevance of feedback and servomechanisms became paramount.<ref>Geof Bowker, ''How to be Universal: Some Cybernetic Strategies, 1943-70'' (1993); Peter Galison, ''The Ontology of the Enemy: Norbert Wiener and the Cybernetic Vision'' (1994)</ref> It is undoubtedly the case that any mobile servomechanism can be trained to scan and attack a target, just as any cybernetic tortoise or maze-running mechanical rat can be fitted with a gun or bomb. The degree to which the “cybernetic moment” was built on an “ontology of war” should not be underestimated; however, it is equally important to recognise that the builders of the cybernetic devices studied in this text were, before and after the war, physiological psychologists leading in the field of brain research.<ref>Andrew Pickering, ''The Cybernetic Brain: Sketches of Another Future'', University of Chicago Press (2010)</ref> In this capacity they developed machines which were designed to read and affect the nervous system. The British cyberneticians Kenneth Craik, William Ross Ashby, and William Grey Walter were all physiological psychologists, convinced that machines could be built which model the mind and nervous system. Such machines could express mind at its most rudimentary level: as an organism which feeds information through its system and which affords adaptation to its immediate environment.

The cybernetic moment might be seen, therefore, as a meeting of:

<p class="indent">

a) the contingencies of war<br>

b) the conception of mind as coextensive with the system it inhabits.

</p>

It is after WWII that these two discourses overlap and the principle of a weapon seeking a target is translated into the principle of an organism seeking purpose. This meeting point had already been articulated by Kenneth Craik in the early 1940s.<ref>The term "the cybernetic moment" has been useful in contemporary discourse; see Ronald R. Kline, ''The Cybernetics Moment: Or Why We Call Our Age the Information Age'' (2015). Note: I locate the beginnings of this "moment" at precisely the point where the discourses of WWII military research and physiological psychology come together to create a new "discourse network" of which McCulloch, Bateson, Kubie, and the British cyberneticians were a part. Here I use "discourse network" in the sense in which F. Kittler uses it in ''Discourse Networks 1800/1900'': the moment when the means of storage and transmission of information undergo a transformation.</ref>

The implication arising from the premise that an organism seeking purpose can be modelled by a machine is that the discourse of the organism finds its equivalence in the discourse of the machine. In the previous chapters I argued that this conflation did not arrive with the moment of cybernetics: Samuel Butler had been amongst those who recognised the implications of the advent of the “vapour engine”, and Alfred Russel Wallace had observed that the operations of the self-regulating steam engine and of natural selection were in principle the same. Long before the advent of cybernetics, the experimental behaviourist Clark Hull, a builder of “thinking machines”, maintained: “it should be a matter of no great difficulty to construct parallel inanimate mechanisms […] which will genuinely manifest the qualities of intelligence, insight, and purpose, and which will insofar be truly psychic”.<ref>Clark Hull. Idea Books 1929-</ref> The premise of organism-machine equivalence was well established in the research cultures of experimental psychology before cybernetics arrived on the scene.

The group of three British physiological psychologist-cyberneticians, William Ross Ashby, Kenneth Craik, and William Grey Walter,<ref>Kenneth Craik’s wartime research included studying combatants’ performance in a simulation cockpit.</ref> were all hands-on engineers, and all three were at the forefront of experimental brain research. As brain researchers, they sought to extend Pavlov’s conditioned reflex through experimentation with servomechanisms. Grey Walter and Craik both worked at the conditioned reflex laboratory at Cambridge, where Craik was based, and Craik was a frequent visitor to the Burden Neurological Institute, Bristol, where Grey Walter was director of physiology. Here Craik collaborated with Grey Walter, using the EEG machine which Walter had developed to research the brain’s scanning function in relation to its production of particular brain waves (“alpha waves”). It was Craik who first suggested the relation between scanning and feedback to Grey Walter and encouraged the construction of a servomechanism which would combine the elements of scanning and feedback. This resulted in the Machina Speculatrix, or Cybernetic Tortoise (1947), which I will describe in more detail later.

In this chapter we will examine two texts by Craik: the unfinished ''The Mechanism of Human Action'' (c. 1943), posthumously published in ''The Nature of Psychology'' (1966), and ''The Nature of Explanation'' (1943). In ''The Mechanism of Human Action'' Craik outlines the conditions necessary to build a cybernetic creature which behaves like an animal. In the second text, ''The Nature of Explanation'', Craik sets out his philosophical position, which, in line with Samuel Butler, understands mind as a function of matter (and not as an entity outside the material realm).

[[file:CraikNature ofPsychology.jpg|240px|thumb]]

==CRAIK'S CONTEXT==

Kenneth Craik died in a cycling accident in 1945 at the age of 31. A selection of Craik’s papers and essays, ''The Nature of Psychology'' (1966), was published years after his death.<ref>Kenneth J. Craik, ''The Nature of Psychology: A Selection of Papers, Essays and Other Writings'', Stephen L. Sherwood ed. (1966), with an Introduction by Warren McCulloch, Leo Verbeek and Stephen L. Sherwood.</ref> His philosophical work ''The Nature of Explanation'' (1943) was, however, published in his lifetime; his influential paper ''Theory of the Human Operator in Control Systems'' followed posthumously (1947-48). Craik's work anticipated many of the key ideas relating to negative feedback and purpose which would later be discussed in Norbert Wiener’s ''Cybernetics'' (1948) and at the Macy conferences on cybernetics in New York (1946-1953).

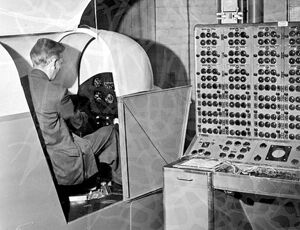

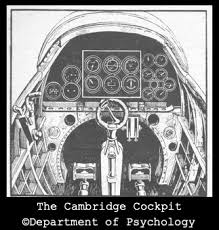

[[File:SimulationCockpi.jpg|thumb|Simulation Cockpit, Cambridge Human Cognition and Brain Science Unit]] | [[File:SimulationCockpi.jpg|thumb|Simulation Cockpit, Cambridge Human Cognition and Brain Science Unit]] | ||

In 1947, following a visit to the Burden Neurological Institute by Norbert Wiener, Grey Walter noted:

<p class="body-quote">

“We had a visit yesterday from a Professor Wiener, from Boston. I met him over there last winter and found his views somewhat difficult to absorb, but he represents quite a large group in the States… These people are thinking on very much the same lines as Kenneth Craik did, but with much less sparkle and humour.”<ref> cite</ref></p>

Despite the differences in style identified by Grey Walter, there is a remarkable similarity between Wiener's and Craik’s respective approaches. Both derived their work on feedback systems from their wartime work on anti-aircraft predictors, and both extended that war work into universal principles of organisation, which is why Craik has been referred to as “the English Norbert Wiener”.<ref>A. Pickering, ''The Cybernetic Brain''</ref>

During WWII Craik designed and built a simulation cockpit, in which he tested pilots’ ability to function: their adaptation to different light conditions and the effect of fatigue on mental acuity (Craik's main field of study was the psychology of visual perception). From this work he concluded that the pilot in the cockpit works in a similar way to a servomechanism. Just as a servo is able to correct "misalignment" through cyclical action, so too is the human being.<ref>Craik obituary</ref> Like Wiener, Craik was able to extend these principles into a more speculative, philosophical realm.

[[File:Craik SimulationCockpit.jpeg|thumb|Craik's Simulation Cockpit]]

In ''The Nature of Explanation'' Craik argues for a hylozoist conception of mind and consciousness, which is to say that there is no distinction between mind and matter. To underline this interconnectedness of mind and matter (hylozoism), Craik argues for a particular conception of causation and for a particular function of the model as a form of immanent analogy. Central to Craik’s argument is that model making is constitutive of mind: an essential quality of neural machinery is that it expresses the capacity to model external events.<ref>Craik ''The Nature of Explanation'' (1943)</ref> I will investigate ''The Nature of Explanation'' in greater detail later in this chapter, but first I will examine ''The Mechanism of Human Action'', which provides a blueprint, or instruction manual, for the cybernetic menagerie that would be built after Craik's death.

==THE MECHANISM OF HUMAN ACTION==

In ''The Mechanism of Human Action'', Craik outlines the conditions needed to build a cybernetic creature which models the attributes of a living organism. Such a machine, Craik makes clear, requires input to cycle in long and short feedback loops. This balance of “qualitative” and “quantitative” feedback responses regulates the system. As it cycles feedback, the machine is trying to “correct a misalignment” and with each circuit to get closer to its goal, which means that with each circuit the probability of success increases. Craik takes the reader through the different scales at which the feedback mechanism operates, first establishing the continuity between biological regulation and nervous regulation, and then providing a precise definition of how a biological feedback system can be transferred to feedback machines. Craik outlines how each level of homeostasis is embedded in the next, establishing levels of abstraction which run from basic regulation, to nervous regulation, to learned response.<ref>K. Craik, ''The Mechanism of Human Action'' (c. 1943), in ''The Nature of Psychology'' (1966)</ref> At a fundamental level, the circular, cumulative action of the machine-organism corresponded to the on-off function of a neurone, which McCulloch and Pitts theorise in their paper ''A Logical Calculus of the Ideas Immanent in Nervous Activity'' (1943),<ref>Warren S. McCulloch and Walter Pitts, ''A Logical Calculus of the Ideas Immanent in Nervous Activity'' (1943)</ref> in which they argue that this action is essentially probabilistic because a selection is made on the basis of the previous action.
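The all-or-none unit McCulloch and Pitts describe can be sketched, under simplifying assumptions, as a threshold gate: the unit fires when enough excitatory inputs are active, and any active inhibitory input silences it. The function name `mp_neuron` and the example threshold are illustrative assumptions; the sketch models only the on-off function itself, not Craik's machine-organism.

```python
def mp_neuron(excitatory, inhibitory, threshold):
    """Return 1 if the unit fires, else 0 (a McCulloch-Pitts style threshold unit).

    excitatory, inhibitory -- sequences of 0/1 input signals
    threshold              -- number of active excitatory inputs needed to fire
    """
    # Absolute inhibition: any active inhibitory input silences the unit.
    if any(inhibitory):
        return 0
    # All-or-none response: fire only when enough excitatory inputs are active.
    return 1 if sum(excitatory) >= threshold else 0


# With threshold 2 and two excitatory inputs, the unit behaves as logical AND.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, mp_neuron([a, b], [], threshold=2))
```

Lowering the threshold to 1 turns the same unit into an OR gate, which is how McCulloch and Pitts could treat networks of such units as carrying out logical operations.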

As Craik looks forward to the cybernetic future, he also surveys the past.

In ''The Mechanism of Human Action'', Craik frames the issue of design and purpose in terms similar to those of Samuel Butler in ''Evolution Old and New'' (1879).<ref>Samuel Butler ''Evolution Old and New'' (1879)</ref> Craik constructs his argument with tools similar to Butler's: evolution cannot be regulated by chance ''or'' by purposeful design (Craik, like Butler, favours specific diversity). Both recognise the structural equivalence between feedback machines and organisms. Both recognise that the machine with feedback follows a different teleological logic from the machine without. Therefore, a redefinition of purpose is required. Craik, like Butler, recognises that homeostasis in environment and organism is homologous (structurally similar at different scales); an organism, an ecology, and a feedback system share the same structural principle, even if they do not resemble each other. It follows that it is possible to build a machine that has little physical resemblance to the brain or organism it models, and yet still embodies the same structural principle. We note here that the cybernetic creatures soon to be built, such as Grey Walter's tortoise and Ross Ashby's homeostat, bore little resemblance to the systems they modelled. Indeed, the cybernetic creatures embodied a set of principles that could be seen in a number of systems at different scales (from an organism to an environment).

In ''The Mechanism of Human Action'', Craik sets out a road map for the cyberneticians who would shortly follow him. Although the terminology would differ (“dynamic equilibrium” was Craik’s preferred term over the more precise “homeostasis”; “cyclical action” would be replaced by “cybernetics”), all the elements needed to describe an epistemology that Wiener, McCulloch, and Bateson would recognise are present in Craik’s writings.

In ''The Mechanism of Human Action'' (c. 1943) Craik first cites “dynamic equilibrium”<ref>Craik makes reference to Herbert Spencer and Pavlov in respect of the term "dynamic equilibrium"</ref> as the regulator of both servomechanisms and organisms. Here, the “stable state” is regulated by the use of external energy, which is drawn on to correct any instability;<ref>''The Nature of Psychology'', p. 13</ref> Craik characterises this expenditure of energy as “downhill”.

<p class="body-quote">

Craik: “Living organisms were, with few exceptions, the first devices to use a downhill reaction – such as the combustion of carbohydrates – to provide them with a store of energy by which to drive a few uphill reactions for their own benefit. This does not mean, of course, that the living organisms live contrary to the second law of thermodynamics and can prevent the gradual degeneration of energy; it merely means that they have means of storing external energy in potential form for driving some local uphill reaction. If we consider the whole picture, the reaction is, as far as we know always downhill on average; but some parts of it may go uphill by virtue of the energy derived from other parts.”<ref>K. Craik, ''The Mechanism of Human Action'', in ''The Nature of Psychology'' (1966), p. 14. Note: here Craik is speaking within the discourse of “dynamic equilibrium”, which in itself does not necessitate homeostasis, although homeostasis in a system is an expression of equilibrium. Craik also uses negative feedback as the regulator of the mechanism-organism.</ref></p>

The basic, down-to-earth principles of Craik’s mechanism-organism are:

# The organism or servomechanism stores energy taken from outside: this can be food converted to glucose and carried through the system of an organism, or the energy stored in the battery of the servomechanism.
# The controlled liberation of energy (which takes advantage of “downhill” reactions when expending energy).

To approach a “common sense” definition of life, the mechanism-organism also requires:

<p class="indent">

(a) a sensory device for detecting and countering disturbance,<br>

(b) a computing device for determining the right kind of response,<br>

(c) an effector or motor mechanism for making the response.<ref>Craik, ''The Nature of Psychology'' (1966), Cambridge, p. 14</ref>

</p>

Craik next considers the role of negative feedback in the organisation of his machine-organism's behaviour and proposes his own adaptive model. His theoretical machine is simply a rod attached to a table which would resist any attempt to push it over. It would contain a “sensory element”, such as a pendulum, which would be actuated when the rod is pushed from the vertical. The misalignment controls the motor, “which runs in the appropriate direction until the misalignment has decreased to zero.”<ref>Kenneth Craik, ''The Nature of Psychology'', p. 16</ref> Craik's hypothetical model demonstrates how negative feedback establishes stability in the organism and the machine,<ref>''The Nature of Psychology'', pp. 15-16</ref> and that this is common to any “automatic regulating or stability restoring machine”.<ref>''The Nature of Psychology'', p. 16</ref> Craik goes on to extend this into the biological realm. From a biological point of view, negative feedback implies “that it is modifying its behaviour (that is the motion of its motor) as a result of its own previous behaviour; if that behaviour has restored equilibrium, and thus has been successful, the machine will stop, otherwise it will go on trying.”<ref>K. Craik, ''The Nature of Psychology'', p. 16. Note: if Craik's model is meant to do as little as possible, simply establishing its ability to restore itself to a maximum degree of stability (inaction), it is very similar in principle to Ross Ashby's Homeostat. See Steps to a (media) Ecology. Craik's rod is also an illustration of Lacan's reading of the death drive in Freud's ''Beyond the Pleasure Principle''; see The Tortoise and Homeostasis.</ref>
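Craik's rod can be sketched as a discrete negative-feedback loop in which the three functions he lists (sensing, computing, effecting) each take one line. This is a minimal illustration, not Craik's design: the function name `restore_vertical`, the proportional `gain`, and the `tolerance` at which the machine treats the misalignment as zero are all assumptions.

```python
def restore_vertical(tilt, gain=0.5, tolerance=1e-3, max_steps=1000):
    """Negative feedback: turn a tilted rod back towards the vertical.

    tilt      -- initial angle from the vertical (arbitrary units)
    gain      -- fraction of the misalignment corrected per step (assumed)
    tolerance -- misalignment treated as "decreased to zero" (assumed)
    Returns the final tilt and the number of corrective steps taken.
    """
    steps = 0
    while steps < max_steps:
        misalignment = tilt                 # sensory element: the pendulum reads the tilt
        if abs(misalignment) <= tolerance:
            break                           # equilibrium restored: the machine stops
        correction = -gain * misalignment   # computing device: oppose the error
        tilt += correction                  # effector: the motor moves the rod
        steps += 1                          # otherwise it "goes on trying"
    return tilt, steps


final_tilt, steps = restore_vertical(10.0)
print(steps)  # 14
```

Here the tilt is halved at each step, so an initial tilt of 10 falls within the tolerance after 14 corrections. In this discrete sketch any gain between 0 and 2 restores equilibrium (gains above 1 overshoot and swing back); at a gain of 2 or more each correction is at least as large as the error, and the rod never settles.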

So far Craik's machine is not capable of “spontaneous” activity; for this to happen, positive feedback would have to come into play.

Craik introduces the figure of the maze-running rat, which is encouraged by negative feedback to avoid the electrified walls of its maze and is driven forward by positive feedback. Craik suggests that such exploratory behaviour may be cumulative. Here Craik links positive feedback with a principle of pleasure, which will direct us toward the cybernetic creatures of the future (Grey Walter's Tortoise and Ross Ashby's Homeostat, for example) and to Lacan's fascination with machines which are capable of homeostasis. Craik goes on:

<p class="body-quote">

“Thus we may suggest that successful and pleasant activities tend, in some way to exert positive feedback and to be cumulative; and although, of course, we do not know where the function of the pleasure easier enters we could make a machine with positive feedback which would continue to perform and increase any activity, by whatever slight random cause it had been initiated, provided it did not endanger the stability and equilibrium of the machine; this would give more appearance of spontaneity.”<ref>Craik, ''The Mechanism of Human Action'', in ''The Nature of Psychology'' (1966), p. 17</ref></p>
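The machine Craik imagines here can be sketched as a positive-feedback loop: whatever activity is present is fed back and added to itself, so a slight random start grows, while a ceiling stands in for his proviso that the growth must not endanger the machine's stability. The names `amplify`, `gain` and `limit`, and the choice of a hard ceiling, are assumptions made for illustration.

```python
import random


def amplify(initial_activity, gain=0.5, limit=1.0, steps=20):
    """Positive feedback: each step adds a fraction of the current activity to itself.

    limit -- assumed ceiling standing in for the machine's stability; the
             activity is clipped so that its magnitude never exceeds it.
    Returns the activity level at every step, starting value included.
    """
    activity = initial_activity
    history = [activity]
    for _ in range(steps):
        activity += gain * activity                   # activity reinforces itself
        activity = max(-limit, min(limit, activity))  # but must not endanger stability
        history.append(activity)
    return history


rng = random.Random(1)                   # seeded so the run is repeatable
slight_cause = rng.uniform(-0.01, 0.01)  # a "slight random cause"
print(slight_cause, amplify(slight_cause)[-1])
```

With no initial activity at all (`amplify(0.0)`) nothing happens, which echoes Craik's point that the appearance of spontaneity depends on some slight initiating cause.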

Craik goes on to explain how a future machine could be regulated by long and short feedback loops, engendering a type of mechanical learning analogous to “response modification” or “selective learning”. Craik distinguishes these forms of learning from “sign” learning, which he regarded as the highest form of conditioning. In sign learning “one stimulus comes to suggest the probable presence of another”.<ref>Craik, ''The Mechanism of Human Action'', in ''The Nature of Psychology'' (1966), p. 18</ref> The most famous example of such stimulus-response association is Pavlov's dogs, which salivated at the sound of a bell they had come to associate with food. As I will outline in the next chapter, it was precisely this response that Grey Walter was trying to engineer in his CORA (COnditioned Reflex Analogue), an adapted version of his cybernetic tortoise which he demonstrated at the Paris Cybernetic Congress of 1951.
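Sign learning, in which “one stimulus comes to suggest the probable presence of another”, can be illustrated with a simple frequency count: the learner records how often a sign (the bell) is followed by another stimulus (food) and turns that count into an estimated probability. This is a hypothetical sketch of the idea only; the class `SignLearner` and its methods are invented for illustration and do not describe Craik's proposals or the circuitry of Grey Walter's CORA.

```python
from collections import Counter


class SignLearner:
    """Hypothetical associative counter: learns how often one stimulus (the sign)
    is followed by another, e.g. a bell followed by food."""

    def __init__(self):
        self.sign_count = Counter()   # trials on which each sign occurred
        self.pair_count = Counter()   # (sign, outcome) co-occurrences

    def observe(self, sign, followed_by):
        """Record one trial: `sign` occurred, followed by `followed_by` (or None)."""
        self.sign_count[sign] += 1
        if followed_by is not None:
            self.pair_count[(sign, followed_by)] += 1

    def expectation(self, sign, outcome):
        """Estimated probability that `outcome` follows `sign` (0.0 if never seen)."""
        if self.sign_count[sign] == 0:
            return 0.0
        return self.pair_count[(sign, outcome)] / self.sign_count[sign]


dog = SignLearner()
for _ in range(8):
    dog.observe("bell", "food")   # bell paired with food
for _ in range(2):
    dog.observe("bell", None)     # bell alone
print(dog.expectation("bell", "food"))  # 0.8
```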

To assist the conceptualisation of a learning machine, Craik draws on the American behaviourist Clark Hull’s “conditioned reflex models”. Hull had compiled a series of “idea books” (1929-52) in which he devised mechanical and electro-chemical automata which worked on the Pavlovian principle of trial-and-error conditioning (Pavlov’s ''Conditioned Reflexes'' was published in English translation in 1927). Hull also derived his own mathematical system to describe the operations of these automata and wanted to apply the rules of the conditioned reflex as computational units within his machines. Hull aimed to build a conditioned reflex machine that would demonstrate intelligence through contact with its environment. The devices were subjected to a “combination of excitory and inhibitory procedures and would exhibit an array of known conditioning phenomena”; that is, the machines would “learn” to overcome obstacles in order to achieve a particular goal. Hull methodically analysed how instances of purpose and insight could be derived from the simple interaction of elementary conditioned habits. “I feel”, stated Hull, “that all forms of action, including the highest forms of intelligent and reflective action and thought, can be handled from purely materialistic and mechanistic standpoints.”<ref>Hull, quoted in David E. Leary, ''Metaphors in the History of Psychology'', Cambridge, 1990</ref>

<p class="floop-link" id="CLARK_HULL">[[CLARK HULL]]</p>

Craik points out that machines are able to show more adaptability and “purposiveness” than one is apt to consider possible “from the study of, gramophones, clocks, automatic lathes”, which lack feedback.<ref>Craik, ''The Mechanism of Human Action'', in ''The Nature of Psychology'' (1966), p. 20</ref> He argues that more will be learned through continued experimentation, and cites two experiments that show the way forward, one by Bradner (1937) and the second by Clark Hull (1943).

[[File:T-Maze.jpg|thumb|''A T-Maze'']]

In Bradner's model a device learns to run to the shorter arm of a T-maze, provided it is replaced at the start of the maze immediately after each attempt. Failure to replace the machine at the start immediately gives the creature time to “forget”, as its response is affected by thermal resistance. Craik describes this as a form of selection: “what really happens is that the machine is arranged to forget, and is given a long time to forget its unsuccessful efforts and very little time to remember its more successful efforts”. Bradner's experiments do demonstrate a feedback response, but the repeated placing and replacing of the device breaks any “biological” continuity. However, the T-maze device does affirm Craik's instinct that a cumulative information circuit may hold the key to a future behaving and thinking machine. The same is true of Clark Hull's experiment. In ''Principles of Behavior''<ref>C. L. Hull, ''Principles of Behavior'' (1943), New York: Appleton-Century-Crofts, p. 27</ref> Hull gives an account of a conditioned reflex model in which the pressing of a Morse key (stimulus) comes to evoke a flashing light (response), because the circuit's initially “high resistance” is reduced by the frequent passage of current through it.
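The principle Craik draws from both experiments, a connection strengthened by each passage of current and weakened by the time that elapses before it is used again, can be sketched numerically. This is an assumption-laden illustration rather than a model of Bradner's device or Hull's circuit: the exponential decay, the `decay_rate` and `boost` values, and the function name `trace_before_next_trial` are all hypothetical. It compares a response that is renewed after a short run with one renewed after a run three times as long.

```python
import math


def trace_before_next_trial(cycle_time, trials, decay_rate=0.5, boost=1.0):
    """Strength of a hypothetical 'memory trace' just before the next trial.

    Each trial passes current through the element, adding `boost` to the trace
    (strengthening by use, as with Hull's reduced resistance). Between trials the
    trace decays exponentially over `cycle_time`, standing in for the thermal
    'forgetting' Craik describes in Bradner's device.
    """
    trace = 0.0
    for _ in range(trials):
        trace += boost                                # strengthened by use
        trace *= math.exp(-decay_rate * cycle_time)   # forgetting while idle
    return trace


short_arm = trace_before_next_trial(cycle_time=1.0, trials=20)
long_arm = trace_before_next_trial(cycle_time=3.0, trials=20)
print(short_arm > long_arm)  # True
```

Over repeated trials the trace of the quickly renewed response settles near e^(-0.5)/(1 - e^(-0.5)), about 1.54, while the slowly renewed one settles near 0.29: the short run leaves little time to forget, the long one a great deal.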

While Kenneth Craik would not live to see his theories affirmed (that adaptation is afforded by long and short feedback loops in a circuit, and that the principles of experimental psychology and WWII research into predictive servomechanisms would be married to produce the new discipline of cybernetics), his work and writing presented models for his contemporaries to follow, principally Ross Ashby and Grey Walter, whose Homeostat and Cybernetic Tortoise would build on his legacy.

[[File:Craik.jpg|thumb]]

==THE NATURE OF EXPLANATION==

In his philosophical work ''The Nature of Explanation'' (1943) Craik argues for an anti-Cartesian, non-vitalistic hylozoism: mind is a function of matter, rather than an essence opposing matter. The book includes the chapter ''Hypothesis on the Nature of Thought'', which bears a striking resemblance to Samuel Butler's argumentation in ''Evolution Old and New'', in that both Butler and Craik hold the model to be central to the evolution and processes of thought. Both agree that the human ability to model dispenses with the need to go through many redundant generations before reaching the optimum design (as the evolutionary process must). Craik points out that the Queen Mary was designed with the aid of a model in a tank; it would be folly to build many generations of unseaworthy vessels before arriving at one able to cross the Atlantic safely. More importantly, a model may share a ''relation structure'' with the thing it models: it need not resemble that thing, but it parallels its behaviour, and that behaviour can be translated back into the terms of the original object. Craik gives the example of Kelvin's tide predictor, which bears no physical resemblance to the tides it predicts. The tide predictor is a series of pulleys which reproduce oscillations at frequencies that resemble, in essential respects, the variation in tide level at a given spot.<ref>Craik, ''The Nature of Explanation'', p. 52</ref>
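Kelvin's machine embodied what is now called harmonic tidal prediction: the height of the tide is treated as a mean level plus a sum of cosine oscillations (tidal constituents), each with its own period, amplitude and phase, and each pulley in the machine supplied one of these terms. The sketch below computes such a sum. The M2 and S2 periods are the standard principal lunar and solar semidiurnal periods; the amplitudes, phases and mean level are invented placeholders, not data for any real port, and `tide_height` is a hypothetical name.

```python
import math

# Each constituent: (name, period in hours, amplitude in metres, phase in radians).
# The periods are the standard M2 and S2 values; the amplitudes and phases
# are invented placeholders, not measurements for any real harbour.
CONSTITUENTS = [
    ("M2", 12.4206, 1.20, 0.0),
    ("S2", 12.0000, 0.40, 0.5),
]


def tide_height(t_hours, mean_level=2.0, constituents=CONSTITUENTS):
    """Mean level plus a sum of cosine oscillations, one per constituent."""
    height = mean_level
    for _name, period, amplitude, phase in constituents:
        omega = 2 * math.pi / period  # angular speed of this constituent
        height += amplitude * math.cos(omega * t_hours + phase)
    return height


# Predictions every six hours over the first day.
for hour in range(0, 25, 6):
    print(hour, round(tide_height(hour), 2))
```

Nothing in the code resembles water; it shares with the tide only the structure of summed oscillations, which is the sense in which Craik speaks of a relation structure.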

Craik takes this idea of | Craik takes this idea of “relation structure” and applies it to Clark Hull's stimulus-response experiments, which bear a relation structure in essential respects to the things it models (in this case synaptic response to stimulus, the capacity to remember).<ref>Craik describes the nervous system as organised stochastically, the signals ‘take the path of least resistance’. This process works like the telephone exchange, says Craik, he also likens the human brain to a computer (the model being Douglas Hartree’s differential analyser (1934)). These are analogies but they are physical working models which “work in the same way as the process it parallels” the machine does not have to physically resemble the thing it parallels “it works in the same way in certain essential respects” P 53</ref> | ||

Craik points out that we must adopt a very specific understanding of | Craik points out that we must adopt a very specific understanding of “analogy” when we make these parallels between the physical world and the devices that model it. “[T]he telephone exchange may resemble the nervous system in just the way I find important; but the central point is the principle underlining the similarity”.<ref> Craik, ''Nature of Explanation'', p53</ref> | ||


Latest revision as of 10:26, 10 March 2022

Kenneth Craik: “an essential quality of neural machinery is that it expresses the capacity to model external events.”[1]

Kenneth Craik: “[...] our brains and minds are part of a continuous causal chain which includes the minds and brains of other men and it is senseless to stop short in tracing the springs of our ordinary, co-operative acts.”[2]

Warren McCulloch: “In 1943, Kenneth Craik published his little book called The Nature of Explanation, which I read five times before I realized why Einstein said it was a great book” […] “[Kenneth Craik’s] work has changed the course of British physiological psychology. It is close to cybernetics. Craik thought of our memory as a model of the world with us in it, which we update every tenth of a second for position, every two for velocity, and every three for acceleration as long as we are awake.”[3]

Assessments of the cybernetic era often stress that the key members of the cybernetic movement were engaged in military research during World War II, and that it was in this context that feedback and servomechanisms became paramount.[4] It is undoubtedly the case that any mobile servomechanism can be trained to scan and attack a target, just as any cybernetic tortoise or maze-running mechanical rat can be fitted with a gun or bomb. The degree to which the “cybernetic moment” was built on an “ontology of war” should not be underestimated. However, it is equally important to recognise that the builders of the cybernetic devices studied in this text were, before and after the war, physiological psychologists leading the field of brain research.[5] In this capacity they developed machines which were designed to read and affect the nervous system. The British cyberneticians Kenneth Craik, William Ross Ashby, and William Grey Walter were all physiological psychologists, convinced that machines could be built which model the mind and nervous system. Such machines could express mind at its most rudimentary level: as an organism which feeds information through its system and which affords adaptation to its immediate environment.

The cybernetic moment might be seen, therefore, as a meeting of

a) the contingencies of war

b) the conception of mind as coextensive with the system it inhabits.

It is after WWII that these two discourses overlap, and the principle of a weapon seeking a target is translated into the principle of an organism seeking purpose. This meeting point was articulated by Kenneth Craik in the early 1940s.[6]

The implication arising from the premise that an organism seeking purpose can be modelled by a machine is that the discourse of the organism finds its equivalent in the discourse of the machine. In the previous chapters I argued that this conflation did not arrive with the moment of cybernetics. Samuel Butler had been amongst those who recognised the implications of the advent of the “vapour engine”, and Alfred Russel Wallace had observed that the self-regulating steam engine and natural selection operate on essentially the same principle. Long before the advent of cybernetics, the experimental behaviourist Clark Hull, a builder of “thinking machines”, maintained: “it should be a matter of no great difficulty to construct parallel inanimate mechanisms […] which will genuinely manifest the qualities of intelligence, insight, and purpose, and which will insofar be truly psychic”.[7] The premise of organism-machine equivalence was well established in the research cultures of experimental psychology before cybernetics arrived on the scene.

The three British physiological psychologist-cyberneticians, William Ross Ashby, Kenneth Craik, and William Grey Walter,[8] were all hands-on engineers, and all three were at the forefront of experimental brain research. As brain researchers, they sought to extend Pavlov’s conditioned reflex through experimentation with servomechanisms. Grey Walter and Craik both worked at the conditioned reflex laboratory at Cambridge, where Craik was based. Craik was also a frequent visitor to the Burden Neurological Institute, Bristol, where Grey Walter was the director. There Craik collaborated with Grey Walter, using the EEG machine Walter had developed to research the brain’s scanning function in relation to its production of particular brain waves (“alpha waves”). It was Craik who first suggested the relation between scanning and feedback to Grey Walter, and he encouraged the construction of a servomechanism which would combine the elements of scanning and feedback. This resulted in the Machina speculatrix, or Cybernetic Tortoise (1947), which I will describe in some detail soon.

In this chapter we will examine two texts by Craik: the unfinished The Mechanism of Human Action (c. 1943), posthumously published in The Nature of Psychology (1966), and The Nature of Explanation (1943). In The Mechanism of Human Action Craik outlines the conditions necessary to build a cybernetic creature which behaves like an animal. In the second text, The Nature of Explanation, Craik sets out his philosophical position, which, in line with Samuel Butler, understands mind as a function of matter (and not an entity outside of the material realm).

CRAIK'S CONTEXT

Kenneth Craik died in a cycling accident in 1945 at the age of 31. A selection of Craik’s papers and essays, The Nature of Psychology (1966), was published more than two decades after his death.[9] His philosophical work The Nature of Explanation (1943) was, however, published in his lifetime, as was his influential paper Theory of the Human Operator in Control Systems (1943). Craik's work anticipated many of the key ideas concerning negative feedback and purpose which would later be discussed in Norbert Wiener’s Cybernetics and at the Macy conferences on cybernetics in New York (1946-1953).

In 1947, following a visit to the Burden Neurological Institute by Norbert Wiener, Grey Walter noted:

“We had a visit yesterday from a Professor Wiener, from Boston. I met him over there last winter and found his views somewhat difficult to absorb, but he represents quite a large group in the States… These people are thinking on very much the same lines as Kenneth Craik did, but with much less sparkle and humour.”[10]

Despite the differences in style identified by Grey Walter, there is a remarkable similarity between Wiener’s and Craik’s approaches. Both derived their work on feedback systems from their wartime work on anti-aircraft predictors, and both extended their war work to find application in universal principles of organisation. This is why Craik has been referred to as “the English Norbert Wiener”.[11]

During WWII Craik designed and built a cockpit simulator, in which he tested pilots’ ability to function: their adaptation to different light conditions and the effect of fatigue on mental acuity (Craik's main study was the psychology of visual perception). From this work he concluded that the pilot in the cockpit works in a similar way to a servomechanism. Just as a servo is able to correct “misalignment” through cyclical action, so is the human being.[12] Like Wiener, Craik was able to extend these principles into a more speculative, philosophical realm.

In The Nature of Explanation Craik argues for a hylozoist conception of mind and consciousness, which is to say that there is no distinction between mind and matter. To underline the interconnectedness of mind and matter (hylozoism), Craik argues for a particular conception of causation and for a particular function of the model as a form of immanent analogy. Central to Craik’s argument is that model making is constitutive of mind: an essential quality of neural machinery is that it expresses the capacity to model external events.[13] I will investigate The Nature of Explanation in greater detail later in this chapter. First, however, I will examine The Mechanism of Human Action, which provides a blueprint, or instruction manual, for the cybernetic menagerie that would be built after Craik's death.

THE MECHANISM OF HUMAN ACTION

In The Mechanism of Human Action, Craik outlines the conditions needed to build a cybernetic creature which models the attributes of a living organism. Such a machine, Craik makes clear, requires input to cycle in long and short feedback loops. This balance of “qualitative” and “quantitive” feedback responses regulates the system. As the machine cycles feedback, it is trying to “correct a misalignment” and to get closer to the goal with each circuit, which means that with each circuit the probability of success increases. Craik takes the reader through the different scales at which the feedback mechanism operates. He first establishes the continuity between biological regulation and nervous regulation, then gives a precise definition of how a biological feedback system can be transferred to feedback machines. Craik outlines how each level of homeostasis is embedded in the next, establishing levels of abstraction which run from basic regulation, to nervous regulation, to learned response.[14] At a fundamental level, the circular, cumulative action of the machine-organism corresponded to the on-off function of a neurone, which McCulloch & Pitts theorise in their paper A Logical Calculus of the Ideas Immanent in Nervous Activity (1943).[15] There they argue that this action is essentially probabilistic because a selection is made on the basis of the previous action.
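The on-off function of the McCulloch-Pitts neurone can be illustrated as a threshold rule over binary inputs. This is a minimal sketch only. The function names and the choice of AND/OR as examples are illustrative assumptions, not Craik's notation or that of the 1943 paper. The sketch shows the all-or-none firing rule, not the selection on the basis of previous action described in the paragraph above.

```python
def mp_neuron(excitatory: list[int], inhibitory: list[int], threshold: int) -> int:
    """All-or-none unit: returns 1 (fires) or 0 (silent).

    Any active inhibitory input vetoes firing (absolute inhibition);
    otherwise the unit fires when the number of active excitatory
    inputs reaches the threshold.
    """
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= threshold else 0


def logical_and(a: int, b: int) -> int:
    # Both inputs must be on to reach a threshold of 2.
    return mp_neuron([a, b], [], threshold=2)


def logical_or(a: int, b: int) -> int:
    # Either input alone reaches a threshold of 1.
    return mp_neuron([a, b], [], threshold=1)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, logical_and(a, b), logical_or(a, b))
```

The same unit yields different logical functions when only its threshold is changed. This is why such units could be treated as the building blocks of a calculating mechanism.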

As Craik looks forward to the cybernetic future, he also surveys the past. In The Mechanism of Human Action, Craik addresses the issue of design and purpose in terms similar to those of Samuel Butler in Evolution Old and New (1879).[16] Craik constructs his argument with tools similar to Butler's: evolution cannot be regulated by chance or by purposeful design (Craik, like Butler, favours specific diversity). Both recognise the structural equivalence between feedback machines and organisms. Both recognise that the machine with feedback follows a different teleological logic to the machine without. Therefore, a redefinition of purpose is required. Craik, like Butler, recognises that homeostasis in environment and organism are homological (they are structurally similar at different scales). An organism, an ecology, and a feedback system have the same structural principle, even if they do not resemble each other. It follows that it is possible to build a machine that has little physical resemblance to the brain or organism it models, and yet still embodies the same structural principle. We note here that the cybernetic creatures soon to be built, such as Grey Walter's tortoise and Ross Ashby's homeostat, bear little resemblance to the systems they modelled. Indeed, the cybernetic creatures embodied a set of principles that could be seen in a number of systems at different scales (from an organism to an environment).

In The Mechanism of Human Action, Craik sets out a road map for the cyberneticians who would shortly follow him. The terminology would differ: “dynamic equilibrium” was Craik’s preferred term over the more precise “homeostasis”, and “cyclical action” would be replaced by “cybernetics”. Nonetheless, Craik’s writings contain all the elements needed to describe an epistemology that Wiener, McCulloch, and Bateson would recognise.

In The Mechanism of Human Action (c. 1943) Craik first cites “dynamic equilibrium”[17] as the regulator of both servomechanisms and organisms. Here, the “stable state” is regulated by the use of external energy, which is drawn on to correct any instability.[18] Craik characterises this expenditure of energy as “down hill”.

Craik: “Living organisms were, with few exceptions, the first devices to use a downhill reaction – such as the combustion of carbohydrates – to provide them with a store of energy by which to drive a few uphill reactions for their own benefit. This does not mean, of course, that the living organisms live contrary to the second law of thermodynamics and can prevent the gradual degeneration of energy; it merely means that they have means of storing external energy in potential form for driving some local uphill reaction. If we consider the whole picture, the reaction is, as far as we know always downhill on average; but some parts of it may go uphill by virtue of the energy derived from other parts.”[19]

The basic, down-to-earth principles of Craik’s mechanism-organism are:

- The organism or servomechanism stores energy taken from outside. This can be food converted to glucose and carried through the system of an organism, or the energy stored in the battery of the servomechanism.

- The controlled liberation of energy (which takes advantage of “downhill” reactions when expending energy).

A mechanism approaching a “common sense” definition of life requires:

(a) a sensory device for detecting and countering disturbance,

(b) a computing device for determining the right kind of response,

(c) an effector or motor mechanism for making the response.[20]
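Craik's three requirements can be read as a sense-compute-effect cycle that draws on the energy store described in the two principles above. The sketch below is hypothetical. The class and method names, the simple "oppose the disturbance" rule, and the energy cost per unit of effort are illustrative assumptions, not Craik's specification.

```python
from dataclasses import dataclass


@dataclass
class MechanismOrganism:
    """Hypothetical machine-organism with Craik's three components and an energy store."""

    energy: float               # stored external energy (food, or battery charge)
    cost_per_unit: float = 0.1  # assumed energy spent per unit of corrective effort

    def sense(self, disturbance: float) -> float:
        # (a) sensory device: detect the disturbance (here it is read directly)
        return disturbance

    def compute(self, reading: float) -> float:
        # (b) computing device: the right response opposes the disturbance
        return -reading

    def effect(self, response: float) -> float:
        # (c) effector: carry out the response, limited by the energy in store
        affordable = self.energy / self.cost_per_unit
        applied = max(-affordable, min(affordable, response))
        self.energy -= abs(applied) * self.cost_per_unit  # controlled liberation of energy
        return applied

    def step(self, disturbance: float) -> float:
        """One sense-compute-effect cycle; returns the disturbance left over."""
        return disturbance + self.effect(self.compute(self.sense(disturbance)))


well_fed = MechanismOrganism(energy=1.0)
print(well_fed.step(2.0))  # → 0.0 (disturbance fully countered)
hungry = MechanismOrganism(energy=0.1)
print(hungry.step(2.0))    # → 1.0 (store runs out before the correction is complete)
```

Keeping the energy store separate from the three components mirrors the distinction in the text between storing external energy and liberating it in a controlled way.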

Craik next considers the role of negative feedback in the organisation of his machine-organism's behaviour and proposes his own adaptive model. His theoretical machine is simply a rod attached to a table which would resist any attempt to push it over. It would contain a “sensory element”, such as a pendulum, which would be actuated when the rod is pushed from the vertical. The misalignment controls the motor, “which runs in the appropriate direction until the misalignment has decreased to zero.”[21] Craik's hypothetical model demonstrates how negative feedback establishes stability in organism and machine alike,[22] a property it shares with any “automatic regulating or stability restoring machine”.[23] Craik goes on to extend this into the biological realm. From a biological point of view, negative feedback implies “that it is modifying its behaviour (that is the motion of its motor) as a result of its own previous behaviour; if that behaviour has restored equilibrium, and thus has been successful, the machine will stop, otherwise it will go on trying.”[24]
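Craik's rod can be simulated as a discrete negative feedback loop. The following is a minimal sketch under two stated assumptions:

- Control is proportional: the motor removes a fixed fraction (`gain`) of the sensed misalignment on each cycle.
- The machine "stops" once the tilt falls below a small tolerance.

Neither the gain nor the tolerance comes from Craik.

```python
def restore_vertical(tilt: float, gain: float = 0.5, tolerance: float = 0.01,
                     max_steps: int = 1000) -> list[float]:
    """Return the rod's tilt after each cycle of sensing and correction."""
    history = [tilt]
    for _ in range(max_steps):
        misalignment = history[-1]            # read by the pendulum (sensory element)
        if abs(misalignment) < tolerance:     # equilibrium restored: the machine stops
            break
        motor = -gain * misalignment          # assumed proportional control: run against the tilt
        history.append(misalignment + motor)  # new tilt after the motor's action
    return history


print(restore_vertical(10.0))
```

With a gain between 0 and 2, the tilt shrinks on every cycle. A gain above 2 would overcorrect, producing oscillations of growing amplitude. Negative feedback therefore stabilises only when the correction is suitably scaled.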

So far Craik's machine is not capable of “spontaneous” activity: for this to happen, positive feedback would have to come into play. Craik introduces the figure of the maze-running rat, which is encouraged by negative feedback to avoid the electrified walls of its maze and is driven forward by positive feedback. Craik suggests such exploratory behaviour may be cumulative. Here Craik links positive feedback with a principle of pleasure. This link points toward the cybernetic creatures of the future, such as Grey Walter's Tortoise and Ross Ashby's Homeostat, and toward Lacan's fascination with machines which are capable of homeostasis. Craik goes on:

“Thus we may suggest that successful and pleasant activities tend, in some way to exert positive feedback and to be cumulative; and although, of course, we do not know where the function of the pleasure easier enters we could make a machine with positive feedback which would continue to perform and increase any activity, by whatever slight random cause it had been initiated, provided it did not endanger the stability and equilibrium of the machine; this would give more appearance of spontaneity.”[25]
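The positive feedback Craik describes can be sketched as activity that feeds on itself, amplifying whatever slight random cause set it going, while a ceiling stands in for "provided it did not endanger the stability and equilibrium of the machine". This is a hypothetical illustration. The growth factor, the ceiling, and the random starting value are assumptions. Modelling stability as a fixed ceiling is a deliberate simplification.

```python
import random


def spontaneous_activity(steps: int = 50, gain: float = 1.2,
                         safe_limit: float = 10.0, seed: int = 0) -> list[float]:
    """Positive feedback amplifying a slight random start, capped for stability."""
    rng = random.Random(seed)
    activity = rng.uniform(0.0, 0.01)  # "whatever slight random cause" initiates it
    history = [activity]
    for _ in range(steps):
        activity *= gain                      # positive feedback: activity feeds on itself
        activity = min(activity, safe_limit)  # never beyond what the machine can safely bear
        history.append(activity)
    return history


run = spontaneous_activity()
print(f"start {run[0]:.4f}, end {run[-1]:.2f}")
```

Compared with the negative feedback loop, the sign of the correction is reversed. The machine amplifies its own previous behaviour instead of cancelling it.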

Craik goes on to explain how a future machine could be regulated by long and short feedback loops, engendering a type of mechanical learning analogous to “response modification” or “selective learning”. Craik distinguishes these forms of learning from “sign” learning, which he regarded as the highest form of conditioning. In sign learning “one stimulus comes to suggest the probable presence of another”.[26] The most famous example of such stimulus-response conditioning is Pavlov's dogs, which salivated at the sound of a bell they associated with food. As I will outline in the next chapter, it was precisely this response that Grey Walter was trying to engineer in his CORA (COnditioned Reflex Analogue). CORA was an adapted version of his cybernetic tortoise, which Grey Walter demonstrated at the Paris Cybernetic Congress of 1951.
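Sign learning can be sketched as an association strength that grows each time bell and food coincide and fades otherwise. Once the association crosses a threshold, the bell alone evokes the response. This is a hypothetical sketch, not a reconstruction of Grey Walter's CORA circuitry. The update rule, `rate`, `decay`, and `threshold` are all assumptions chosen for illustration.

```python
def sign_learning(trials: list[tuple[bool, bool]], rate: float = 0.2,
                  decay: float = 0.05, threshold: float = 0.5) -> tuple[float, list[bool]]:
    """Each trial is (bell, food). Returns the final association strength
    and whether the machine 'salivated' on each trial."""
    association = 0.0
    responses = []
    for bell, food in trials:
        # Food always evokes the response; the bell alone does so only once
        # the learned association has crossed the threshold.
        responses.append(food or (bell and association >= threshold))
        if bell and food:
            association += rate * (1.0 - association)  # coincidence strengthens the sign
        else:
            association -= decay * association         # otherwise the link slowly fades
    return association, responses


training = [(True, True)] * 10
_, before = sign_learning([(True, False)])
_, after = sign_learning(training + [(True, False)])
print(before[-1], after[-1])  # bell alone: no response before training, response after
```

Because the association also decays when the bell sounds without food, repeated unrewarded bells eventually extinguish the response, much as in Pavlov's extinction trials.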

To assist the conceptualisation of a learning machine, Craik draws on the American behaviourist Clark Hull’s “conditioned reflex models”. Clark Hull had compiled a series of “idea books” (1929-52) in which he devised mechanical and electro-chemical automata which worked on the Pavlovian principle of trial-and-error conditioning (Pavlov’s Conditioned Reflexes was published in English translation in 1927). Hull also derived his own mathematical system to describe the operations of these automata, and he wanted to apply the rules of the conditioned reflex as computational units within his machines. Hull aimed to build a conditioned reflex machine that would demonstrate intelligence through contact with its environment. The devices were subjected to a “combination of excitory and inhibitory procedures and would exhibit an array of known conditioning phenomena”. In other words, the machines would “learn” to overcome obstacles in order to achieve a particular goal. Hull methodically analysed how instances of purpose and insight could be derived from the simple interaction of elementary conditioned habits. “I feel”, stated Hull, “that all forms of action, including the highest forms of intelligent and reflective action and thought, can be handled from purely materialistic and mechanistic standpoints.”[27]

Craik points out that machines with feedback are able to show more adaptability and “purposiveness” than one is apt to consider possible “from the study of, gramophones, clocks, automatic lathes”, which lack feedback.[28] He argues that more will be learned through continued experimentation, and he cites two experiments that show the way forward: one by Bradner (1937) and the second by Clark Hull (1943).

In Bradner's model a device learns to run to the shorter arm of a T-maze, provided it is replaced at the start of the maze immediately after each attempt. Failing to return the machine to the start immediately gives the creature time to “forget”, since its response is affected by thermal resistance. Craik describes this as a form of selection: “what really happens is that the machine is arranged to forget, and is given a long time to forget its unsuccessful efforts and very little time to remember its more successful efforts”. Bradner's experiments do demonstrate a feedback response, but the repeated placing and replacing of the device breaks any “biological” continuity. However, the T-maze device does affirm Craik's instinct that a cumulative information circuit may hold the key to a future behaving and thinking machine. The same is true of Clark Hull's experiment. In Principles of Behavior[29] Hull gives an account of a conditioned reflex model in which pressing a Morse key (stimulus) evokes a flashing light (response). The circuit's initially “high resistance” is reduced through use, by the frequent passage of current through it.
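Both devices rest on a trace that is strengthened by use and weakened by elapsed time:

- In Hull's circuit, current lowers the resistance.
- In Bradner's device, the thermal effect fades if the machine is left standing.

A minimal sketch under stated assumptions models this as one trace. Each run adds a fixed `boost`, and the trace halves every `half_life` time units between runs. The exponential decay and both parameters are hypothetical, not measurements from either device.

```python
def trace_after(gaps: list[float], boost: float = 1.0, half_life: float = 10.0) -> float:
    """Return the trace strength after a run following each waiting gap.

    Each run strengthens the trace by `boost` (use lowers resistance); during
    each gap the trace decays by half every `half_life` time units (forgetting).
    """
    trace = 0.0
    for gap in gaps:
        trace *= 0.5 ** (gap / half_life)  # forgetting while the device waits
        trace += boost                     # strengthening by use
    return trace


immediate = trace_after([0.0] * 5)   # replaced at the start straight away
delayed = trace_after([100.0] * 5)   # left standing between attempts
print(immediate, round(delayed, 3))
```

Immediate replacement lets the runs accumulate, while long delays leave almost nothing of earlier runs. This matches the dependence on prompt replacement that the text reports for Bradner's T-maze machine.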

Kenneth Craik would not live to see the affirmation of his theory that adaptation is afforded by long and short feedback loops in a circuit. Nor would he see the principles of experimental psychology married to WWII research into predictive servomechanisms to produce the new discipline of cybernetics. Nevertheless, his work and writing presented models for his contemporaries to follow, principally Ross Ashby and Grey Walter, whose Homeostat and Cybernetic Tortoise would build on his legacy.

THE NATURE OF EXPLANATION

In his philosophical work The Nature of Explanation (1943), Craik argues for an anti-Cartesian, non-vitalistic hylozoism, which is to say that mind is a function of matter rather than an essence opposed to matter. The book includes the chapter “Hypothesis on the Nature of Thought”, which bears a striking resemblance to Samuel Butler's argumentation in Evolution Old and New, in that both Butler and Craik hold the model to be central to the evolution and processes of thought. Both agree that the human ability to model dispenses with the need to pass through many redundant generations before reaching the optimum design, as the evolutionary process must. Craik points out that the Queen Mary was designed with a model in a tank: it would be folly to produce many generations of unseaworthy vessels before arriving at one able to cross the Atlantic safely. More importantly, a model may have a “relation structure” similar to that of the thing it models: it need not resemble that thing, but parallels its behaviour in a way that can be translated back into the terms of the original object. Craik gives the example of Kelvin's tide predictor, which bears no physical resemblance to the tides it predicts. The tide predictor is a series of pulleys which reproduces oscillations at frequencies that resemble, in essential respects, the variation in tide level at a given spot.[30] Craik takes this idea of “relation structure” and applies it to Clark Hull's stimulus-response experiments, whose devices share a relation structure, in essential respects, with the things they model (in this case synaptic response to stimulus and the capacity to remember).[31]
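Kelvin's machine can be read as an analogue computer for a sum of periodic components, each crank-and-pulley contributing one oscillation and a wire over the pulleys adding them together. A rough numerical analogue of that relation structure is sketched below. The two constituents use the standard periods of the principal lunar (M2, about 12.42 hours) and principal solar (S2, 12 hours) semidiurnal tides, but the amplitudes and phase are invented for illustration, and a working predictor combined many more than two constituents.

```python
import math

# Illustrative harmonic constituents: (name, period in hours, amplitude in m, phase in rad).
# The periods are the standard M2 and S2 values; amplitudes and phases are invented.
CONSTITUENTS = [
    ("M2", 12.42, 1.0, 0.0),
    ("S2", 12.00, 0.4, 0.5),
]


def tide_height(t_hours: float, mean_level: float = 0.0, constituents=CONSTITUENTS) -> float:
    """Sum of cosines: each term plays the role of one crank-and-pulley in the machine."""
    return mean_level + sum(
        amp * math.cos(2 * math.pi * t_hours / period + phase)
        for _name, period, amp, phase in constituents
    )


def beat_period_hours(p1: float, p2: float) -> float:
    """Period of the slow envelope produced when two nearby frequencies combine."""
    return 1.0 / abs(1.0 / p1 - 1.0 / p2)


if __name__ == "__main__":
    for t in range(0, 25, 3):
        print(f"t = {t:2d} h  height = {tide_height(t):+.2f} m")
    days = beat_period_hours(12.42, 12.00) / 24
    print(f"spring-neap cycle ~ {days:.1f} days")
```

Nothing in the sketch resembles water; it reproduces only the relation between periodic causes and their summed effect, which is the sense of "relation structure" at issue. The slow beat between the two assumed constituents comes out at about 14.8 days, the familiar spring-neap cycle.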

Craik points out that we must adopt a very specific understanding of “analogy” when we make these parallels between the physical world and the devices that model it. “[T]he telephone exchange may resemble the nervous system in just the way I find important; but the central point is the principle underlying the similarity”.[32]

One could inventory countless instances in which a model is unlike the thing it parallels; what is significant is the principle underlying the similarities, namely their tendency to organise stimuli into symbolic order. Why, Craik asks, does this tendency to make analogies actually exist? Humans tend to see simple rules (relation structures) operating within complex systems because this reflects the structure of reality. Indeed, Craik goes on to suggest, we might consider that our brain models the world we inhabit because our brain parallels the systems that bring it into consciousness.[33] “The emergence of common principles and similarities is not so surprising if it is shown that all substance is composed of similar ultimate units...”[34]

For Craik, “significance” (signification) is an essential element of human experience. Significance is the relatedness of things. For Craik, “all propositions carry as it were the right to apply to something objectively real”.[35] Once one accepts the possibility of symbolism, one must accept that symbols can represent alternatives, between which experiment decides. Thus experiment becomes the arbiter of what is the case, and causality is justified by its trial in experiment.[36] Craik draws on the experimental hypothesis of Helmholtz and supports the idea of “causal interaction in nature”. Admittedly, one cannot trace causation back to a first cause, but this is not sufficient reason to doubt causation as such (as did Berkeley and Hume). The model works in the same way as the thing it parallels, and in so doing it goes through three phases: translation, inference, and re-translation.

- translation of external process into symbols

- inference, which is the arrival at other symbols through reasoned deduction

- re-translation into external process (such as building and predicting).
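The three phases can be made concrete with a toy predictor loosely modelled on the anti-aircraft predictors Craik had in mind. Everything here is an assumption for illustration: the function names, the "t,x" text format standing in for sensor readings, and the choice of simple linear extrapolation as the inference step.

```python
# Hypothetical names and data format, for illustration only.


def translate(raw_readings: list[str]) -> list[tuple[float, float]]:
    """Phase 1: translate an external process (raw 't,x' readings) into symbols (numbers)."""
    symbols = []
    for line in raw_readings:
        t_text, x_text = line.split(",")
        symbols.append((float(t_text), float(x_text)))
    return symbols


def infer(symbols: list[tuple[float, float]], t_future: float) -> float:
    """Phase 2: reason from symbols to new symbols, here by linear extrapolation."""
    (t1, x1), (t2, x2) = symbols[-2], symbols[-1]
    velocity = (x2 - x1) / (t2 - t1)
    return x2 + velocity * (t_future - t2)


def retranslate(predicted_x: float) -> str:
    """Phase 3: re-translate the predicted symbol into an action in the world."""
    return f"aim at x = {predicted_x:.1f}"


if __name__ == "__main__":
    readings = ["0.0,0.0", "1.0,2.0", "2.0,4.0"]
    print(retranslate(infer(translate(readings), t_future=3.5)))  # aim at x = 7.0
```

The point of the sketch is that the inference step yields a symbol for a position that has not yet occurred. A real predictor would also smooth noisy readings and allow for shell flight time; only the three-phase skeleton is kept here.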

Some machines demonstrate this triadic ability, such as anti-aircraft predictors and calculating machines. Craik again cites Clark Hull’s models, which respond to altered systems; from here Craik posits that an essential quality of neural machinery is that it expresses the capacity to model external events. The principle underlying the similarity of these machines is more important than superficial analogy. The brain may work like a telephone exchange, but its similarity to the exchange’s switching mechanism is only one way to understand the operations of mind. The structural principle underlying the similarities is Craik’s triad of translation, inference and re-translation.[37]

Craik applies this triad to brain function as follows:

- the translation of external events into neural patterns through the stimulation of the sense organs

- the interaction and stimulation of other neural patterns (association)

- the excitation by these patterns of effectors, or motor organs.

Craik notes that to signify (to make a model in one's head of something which could be actualized) is closely related to entropy, because signification involves the conservation of energy: it adds to the store of “down hill” actions. For Craik, his own scheme “[…] would be a hylozoist rather than a materialistic scheme. It would attribute consciousness and conscious organisation to matter whenever it is physically organised in certain ways.” The machines Craik cites are an expression of mind, an expression of an ordering structure.

“My hypothesis then is that thought models, or parallels, reality – that its essential feature is not 'the mind', 'the self', 'sense-data', nor propositions but symbolism, and that this symbolism is largely of the same kind as that which is familiar to us in mechanical devices which aid thought and calculation.”[38]

Craik's notion of the model involves a very specific reading of the function of metaphor. Things, in their coming into being, mime the structures that run through reality. This is not to say that reality is an inert exterior object which is given significance (code is not written into or onto reality), but rather that reality is emergent within the process of signifying itself. One makes a distinction, and the difference establishes a relation. Thought, in the action of modelling, does not copy reality, because “our internal model of reality can predict events which have not occurred”. This predictive ability “saves time, expense and even life”.[39] The ability to predict is “down hill” all the way: it is negentropic. It begins with the functions of homeostasis in the organism and extends to conscious purpose; this is the position adopted by the British cyberneticians and endorsed by Gregory Bateson and Warren McCulloch.

The modelling of reality is part of a larger system, which introspective psychology and analytical philosophy have been unable to address. The degree of organisation which goes on at a non-conscious level is, however, evidenced in neuropathological experiments which highlight purposive activity. We generally pass over such activity as natural because greater mechanical complexity often leads to greater simplicity and coordination of performance; as a result, introspective psychology and analytical philosophy pay little attention to these processes as constitutive of thought (in Negative Entropy – Bateson - Freud I will discuss how Gregory Bateson and Warren McCulloch’s cybernetic critique of Freudianism was established on this premise).

For Craik the processes of reasoning are fundamentally no different from the mechanisms of physical nature itself. Neural mechanisms parallel the behaviour and interaction of physical objects. Such processes are suited to imitating the objective reality they are part of, in order to provide information which is not directly observable to the organism (to predict and to model).[40]

Craik compares the performance of an aeroplane with a pile of stones. The aeroplane is more complicated but its performance is more unified. If the parts of the aeroplane had been dropped into a bucket, the atomic complexity would be high but the simplicity of performance would be nil.[41] Once the plane is built, however, there is an atomic and relational complexity which increases the possibilities of performance. The mind, in modelling reality, takes its clues from perception; there is no obligation to decide whether such clues are conscious interpretations or automatic responses to reality which express atomic and relational complexity. For Craik, “all perceptual and thinking processes are continuous with the workings of the external world and of the nervous system”, and there is no hard and fast line between involuntary actions and conscious thought. Craik now approaches a position close to Bateson’s, stressing the “continuity of man and his environment” […] “...our brains and minds are part of a continuous causal chain which includes the minds and brains of other men, and it is senseless to stop short in tracing the springs of our ordinary, co-operative acts”. Again Craik stresses that “man is part of a causally connected universe and his actions are part of the continuous interaction taking place.”[42]

The process of experimentation and the operations of servomechanisms are important for this generation of physiological-psychologist-cyberneticians because experimentation serves as the arbiter of the model proposed by the processes of mind, and because servomechanisms operate at the point where significance emerges. They provide models for a future stage of development, the conscious machine. This is the future that Samuel Butler had modelled from the vapour engine and that Grey Walter would go on to model in the cybernetic tortoise.

Experimentation with servomechanisms is, for Craik, Ashby, and Grey Walter, an expression of the more complex structures that underlie them. In this respect Ross Ashby’s homeostat and Grey Walter’s tortoise are a result of the discourse of Kenneth Craik and of a particularly experimental, hylozoist approach to neuroscience and behaviour. This approach combined rigorous mathematical and philosophical theorisation with the construction of devices in the metal and the analysis of what these machines express. If these creatures think, one might ask, how do they think? Perhaps the machines themselves suggest (in their unconscious, indifferent relation to us, in their blind insistence on passing through our space, making demands on our matter) that we don’t actually think in the way we think we think.[43] Kenneth Craik left the path open for his colleagues Ross Ashby and Grey Walter to build actual performative models which fundamentally challenged what we think thinking is.

- ↑ K. Craik, The Nature of Explanation (1943)

- ↑ K. Craik, The Nature of Explanation (1943)

- ↑ Warren S. McCulloch, The Beginning of Cybernetics, Macy Conferences on Cybernetics, Vol. 2 p 345

- ↑ Geoff Bowker, How to be Universal: Some Cybernetic Strategies 1943-70 (1993); Peter Galison, The Ontology of the Enemy Norbert Wiener and the Cybernetic Vision (1994)

- ↑ Andrew Pickering, The Cybernetic Brain: Sketches of Another Future, University of Chicago Press (2010)

- ↑ The term "the cybernetic moment" has been useful in contemporary discourse; see Ronald R. Kline, The Cybernetics Moment: Or Why We Call Our Age the Information Age. Note: I locate the beginnings of this "moment" at precisely the point where the discourses of WWII military research and physiological psychology come together to create a new "discourse network" of which McCulloch, Bateson, Kubie, and the British cyberneticians were a part. Here I use "discourse network" in the sense in which F. Kittler (Discourse Networks 1800/1900) uses it: the moment when the means of storage and transmission of information undergo a transformation.

- ↑ Clark Hull, Idea Books, 1929-

- ↑ Kenneth Craik’s wartime research included studying combatants’ performance in a simulated cockpit.

- ↑ Kenneth J. Craik, The Nature of Psychology: A Selection of Papers, Essays and Other Writings, Stephen L. Sherwood (ed.), 1966, with an Introduction by Warren McCulloch, Leo Verbeek and Stephen L. Sherwood.

- ↑ cite

- ↑ A. Pickering The Cybernetic Brain

- ↑ Craik obituary

- ↑ K. Craik, The Nature of Explanation (1943)

- ↑ K. Craik, The Nature of Psychology; The Mechanism of Human Action (c. 1943)

- ↑ Warren McCulloch and Walter Pitts, A Logical Calculus of the Ideas Immanent in Nervous Activity (1943)

- ↑ Samuel Butler, Evolution Old and New (1879)

- ↑ Craik makes reference to Herbert Spencer and Pavlov in respect to the term "dynamic equilibrium"

- ↑ The Nature of Psychology, p. 13

- ↑ K. Craik, The Mechanism of Human Action, The Nature of Psychology (1943), p. 14. Note: here Craik is speaking within the discourse of “dynamic equilibrium”, which does not in itself necessitate homeostasis, although homeostasis in a system is an expression of equilibrium. Craik also uses negative feedback as the regulator of the mechanism-organism.

- ↑ K. Craik, The Nature of Psychology (1943), Cambridge, p. 14

- ↑ Kenneth Craik, The Nature of Psychology, p. 16

- ↑ The Nature of Psychology, pp. 15-16

- ↑ p. 16

- ↑ K. Craik, The Nature of Psychology, p. 16. Note: if Craik's model is meant to do as little as possible, simply establishing its ability to restore itself to a maximum degree of stability (inaction), it is very similar in principle to Ross Ashby's Homeostat. See Steps to a (media) Ecology. Craik's rod is also an illustration of Lacan's reading of the death drive in Freud's Beyond the Pleasure Principle; see The Tortoise and Homeostasis.

- ↑ K. Craik, The Mechanism of Human Action, in The Nature of Psychology (1943), p. 17